Documenti di Didattica

Documenti di Professioni

Documenti di Cultura

Comparing Normal Means: New Methods For An Old Problem : Jos e M. Bernardo Sergio P Erez

Caricato da

alrero2011Descrizione originale:

Titolo originale

Copyright

Formati disponibili

Condividi questo documento

Condividi o incorpora il documento

Hai trovato utile questo documento?

Questo contenuto è inappropriato?

Segnala questo documentoCopyright:

Formati disponibili

Comparing Normal Means: New Methods For An Old Problem : Jos e M. Bernardo Sergio P Erez

Caricato da

alrero2011Copyright:

Formati disponibili

BAYESIAN STATISTICS 8, pp. 571576. J. M. Bernardo, M. J. Bayarri, J. O. Berger, A. P. Dawid, D. Heckerman, A. F. M. Smith and M. West (Eds.

) c Oxford University Press, 2007

Comparing Normal Means: New Methods for an Old Problem*

Universitat de Val`ncia, Spain e jose.m.bernardo@uv.es

Jose M. Bernardo

Colegio de Postgraduados, Mexico sergiop@colpos.mx

Sergio Perez

Summary Comparing the means of two normal populations is a very old problem in mathematical statistics, but there is still no consensus about its most appropriate solution. In this paper we treat the problem of comparing two normal means as a Bayesian decision problem with only two alternatives: either to accept the hypothesis that the two means are equal, or to conclude that the observed data are, under the assumed model, incompatible with that hypothesis. The combined use of an information-theory based loss function, the intrinsic discrepancy (Bernardo and Rueda, 2002), and an objective prior function, the reference prior (Bernardo, 1979; Berger and Bernardo, 1992), produces a new solution to this old problem which, for the rst time, has the invariance properties one should presumably require. Keywords and Phrases: Bayes factor, BRC, comparison of normal means, intrinsic discrepancy, KullbackLeibler divergence, precise hypothesis testing, reference prior.

1. STRUCTURE OF THE DECISION PROBLEM Precise hypothesis testing as a decision problem. Assume that available data z have been generated from an unknown element of the family of probability distributions for z Z, {pz ( | , ), , }, and suppose that it is desired to check whether or not these data may be judged to be compatible with the (null) hypothesis H0 { = 0 }. This may be treated as a decision problem with only two alternatives; a0 : to accept H0 (and work as if = 0 ) or a1 : to claim that the observed data are incompatible with H0 . Notice that, with this formulation, H0 is generally a composite hypothesis, described by the family of probability distributions

* This is a synopsis. For the full version, see Bayesian Analysis 2 (2007), 571576. Jos M. Bernardo is Professor of Statistics at the Facultad de Matemticas, Universidad e a de Valencia, 46100-Burjassot, Valencia, Spain. Dr. Sergio Prez works at the Colegio de e Postgraduados, Montecillo, 56230-Texcoco, Mexico. Work by Professor Bernardo has been partially funded with Grant MTM2006-07801 of the MEC, Spain.

572

Jos M. Bernardo and Sergio Prez e e

M0 = {pz ( | 0 , 0 ), 0 }. Simple nulls are included as a particular case where there are no nuisance parameters. Foundations dictate (see e.g., Bernardo and Smith, 1994, and references therein) that, to solve this decision problem, one must specify utility functions u{ai , (, )} for the two alternatives a0 and a1 , and a joint prior distribution (, ) for the unknown parameters (, ); then, H0 should be rejected if, and only if, Z Z [u{a1 , (, )} u{a0 , (, )}] (, | z) d d > 0,

where, using Bayes theorem, (, | z) p(z | , ) (, ) is the joint posterior which corresponds to the prior (, ). Thus, only the utilities dierence must be specied, and this may usefully be written as u{a1 , (, )} u{a0 , (, )} = {0 , (, )} u0 , where {0 , (, )} is the non-negative terminal loss suered by accepting = 0 given (, ), and u0 > 0 is the utility of accepting H0 when it is true. Hence, H0 should be rejected if, and only if, Z Z t(0 | z) = {0 , (, )} (, | z) d d > u0 ,

that is, if the posterior expected loss, the test statistic t(0 | z) is large enough. The intrinsic discrepancy loss. As one would expect, the optimal decision depends heavily on the particular loss function {0 , (, )} used. Specic problems may require specic loss functions, but conventional loss functions may be used to proceed when one does not have any particular application in mind. A common class of conventional loss function are the step loss functions. These forces the use of a non-regular spiked proper prior which places a lump of probability at = 0 and leads to rejecting H0 if, and only if, its posterior probability is too small or, equivalently, if, and only if, the Bayes factor against H0 , is suciently large. This will be appropriate wherever preferences are well described by a step loss function, and prior information is available to justify a (highly informative), spiked prior. It may be argued that many scientic applications of precise hypothesis testing fail to meet one or both of these conditions. Another example of a conventional loss function is the ubiquitous quadratic loss function. This leads to rejecting the null if, and only if, the posterior expected Euclidean distance of 0 from the true value is too large, and may safely be used with (typically improper) noninformative priors. However, as most conventional continuous loss functions, the quadratic loss depends dramatically on the particular parametrization used. But, since the model parametrization is arbitrary, the conditions to reject = 0 should be precisely the same, for any one-to-one function (), as the conditions to reject = (0 ). This requires the use of a loss function which is invariant under one-to-one reparametrizations. It may be argued (Bernardo and Rueda, 2002; Bernardo, 2005b) that a measure of the disparity between two probability distributions which may be appropriate for general use in probability and statistics is the intrinsic discrepancy, Z Z pz (z) pz (z) z {pz (), qz ()} min pz (z) log dz, pz (z) log dz , (1) qz (z) qz (z) Z Z

Comparing Normal Means

573

dened as the minimum (Kullback-Keibler) logarithmic divergence between them. This is symmetric, non-negative, and it is zero if, and only if, pz (z) = qz (z) a.e. Besides, it is invariant under one-to-one transformations of z, and it is additive Q under independent observations; thus if z = {x1 , . . . , xn }, pz (z) = n px (xi ), and i=1 Qn qz (z) = i=1 qx (xi ), then z {pz (), qz ()} = n x {px (), qx ()}. Within a parametric probability model, say {pz ( | ), }, the intrinsic discrepancy induces a loss function {0 , } = z {pz ( | 0 ), pz ( | )}, in which the loss to be suered if is replaced by 0 is not measured terms of the disparity between and 0 , but in terms of the disparity between the models labelled by and 0 . This provides a loss function which is invariant under reparametrization: for any one-to-one function = (), {0 , } = {0 , }. Moreover, one may equivalently work with sucient statistics: if t = t(z) is a sucient statistic for pz ( | ), then z {0 , )} = t {0 , }. The intrinsic loss may be safely be used with improper priors. In the context of hypothesis testing within the parametric model pz ( | , ), the intrinsic loss to be suered by replacing by 0 becomes z {H0 , (, )} inf z {pz ( | 0 , 0 ), pz ( | , )},

0

(2)

that is, the intrinsic discrepancy between the distribution pz ( | , ) which has generated the data, and the family of distributions F0 {pz ( | 0 , 0 ), 0 } which corresponds to the hypothesis H0 { = 0 } to be tested. If, as it is usually the case, the parameter space is convex, then the two minimization procedures in (2) and (1) may be interchanged (Jurez, 2005). a As it is apparent from its denition, the intrinsic loss (2) is the minimum conditional expected log-likelihood ratio (under repeated sampling) against H0 , what provides a direct calibration for its numerical values; thus, intrinsic loss values of about log(100) would indicate rather strong evidence against H0 . The Bayesian Reference Criterion (BRC). Any statistical procedure depends on the accepted assumptions, and those typically include many subjective judgements. If has become standard, however, to term objective any statistical procedure whose results only depend on the quantity of interest, the model assumed and the data obtained. The reference prior (Bernardo, 1979; Berger and Bernardo, 1992; Bernardo, 2005a), loosely dened as that prior which maximizes the missing information about the quantity of interest, provides a general solution to the problem of specifying an objective prior. See Berger (2006) for a recent analysis of this issue. The Bayesian reference criterion (Bernardo and Rueda, 2002) is the normative Bayes solution to the decision problem of hypothesis testing described above which corresponds to the use of the intrinsic loss and the reference prior. Given a parametric model {pz ( | , ), , }, this prescribes to reject the hypothesis H0 { = 0 } if, and only if, Z d(H0 | z) = ( | z) d > 0 , (3)

0

where d(H0 | z), termed the intrinsic (test) statistic, is the reference posterior expectation of the intrinsic loss z {H0 , (, )} dened by (2), and where 0 is a context dependent positive utility constant, the largest acceptable average log-likelihood ratio against H0 under repeated sampling. For scientic communication, 0 could conventionally be set to log(10) 2.3 to indicate some evidence against H0 , and to log(100) 4.6 to indicate strong evidence against H0 .

574

Jos M. Bernardo and Sergio Prez e e

2. NORMAL MEANS COMPARISON

Problem statement in the common variance case. Let available data z = {x, y}, x = {x1 , . . . , xn }, y = {y1 , . . . , ym }, consist of two random samples of possibly different sizes n and m, respectively drawn Q from N(x | x , ) and N(y | y , ), so that Q the assumed model is p(z | x , y , ) = n N(xi | x , ) m N(yj | y , ). It is j=1 i=1 desired to test H0 {x = y }, that is, whether or not these data could have been drawn from some element of the family F0 {p(z | 0 , 0 , 0 ), 0 , 0 > 0}. To implement the BRC criterion described above one should: (i) compute the intrinsic discrepancy {H0 , (x , y , )} between the family F0 which denes the hypothesis H0 and the assumed model p(z | x , y , ); (ii) determine the reference joint prior (x , y , ) of the three unknown parameters when is the quantity of interest; and (iii) derive the relevant intrinsic statistic, that is the reference posterior expectation R d(H0 | z) = 0 ( | z) d of the intrinsic discrepancy {H0 , (x , y , )}. The intrinsic loss. The (KullbackLeibler) logarithmic divergence of a normal distribution N(x | 2 , 2 ) from another normal distribution N(x | 1 , 1 ) is given by Z {2 , 2 | 1 , 1 } = N(x | 1 , 1 ) dx N(x | 2 , 2 ) 2 2 1 2 1 1 1 2 + 1 log 1 . 2 2 2 2 2 2 2 N(x | 1 , 1 ) log

(4)

Using its additive property, the (KL) logarithmic divergence of p(z | 0 , 0 , 0 ) from p(z | x , y , ) is n {0 , 0 | x , } + m {0 , 0 | y , }, which is minimized when 0 = (nx + my )/(n + m) and 0 = . Substitution yields h(n, m) 2 /4, where h(n, m) = 2nm/(m + n) is the harmonic mean of the two sample sizes, and = (x y )/ is the standardized distance between the two means. Similarly, the logarithmic divergence of p(z | x , y , ) from p(z | 0 , 0 , 0 ) is given by n {x , | 0 , 0 } + m {y , | 0 , 0 }, minimized when 0 = (nx + my )/(n + m) 2 and 0 = 2 + (x y )2 (mn)/(m + n)2 . Substitution now yields a minimum divergence (n + m)/2 log[1 + h(n, m)/(2(n + m)) 2 , which is always smaller than the minimum divergence h(n, m) 2 /4 obtained above. Therefore, the required intrinsic loss function is h(n, m) 2 n+m log 1 + , (5) z {H0 , (x , y , )} = 2 2(n + m) a logarithmic transformation of the standardized distance = (x y )/ between the two means. The intrinsic loss (5) increases linearly with the total sample size n + m, and it is essentially quadratic in in a neighbourhood of zero, but it becomes concave for | | > (k + 1)/ k, where k = n/m is the ratio of the two sample sizes, an eminently reasonable behaviour which conventional loss functions do not have. For equal sample sizes, m = n, this reduces to n log[1+2 /4] a linear function of the sample size n, which behaves as 2 /4 in a neighbourhood of the origin, but becomes concave for | | > 2. Reference analysis. The intrinsic loss (5) is a piecewise invertible function of , the standardized dierence of the means. Consequently, the required objective prior is the joint reference prior function (x , y , ) when the standardized dierence of the means, = (x y )/, is the quantity of interest. This may easily be obtained

Comparing Normal Means

575

p using the orthogonal parametrization {, 1 , 2 }, with 1 = 2(m + n)2 + m n 2 , and 2 = y + n /(n + m). In the original parametrization the required reference prior is found to be 2 !1/2 h(n, m) 1 x y . (x , y , ) = 2 1 + (6) 4(m + n) By Bayes theorem, the posterior is (x , y , | z) p(z | x , y , ) (x , y , ). Changing variables to {, y , }, and integrating out y and , produces the (marginal) reference posterior density of the quantity of interest ( | z) = ( | t, m, n) ! r 1/2 h(n, m) h(n, m) 2 NcSt t , n + m 2 1+ 4(m + n) 2 where t= xy , p s 2/h(n, m) s2 = n s2 + m s2 , x y n+m2

(7)

and NcSt( | , ) is the density of a noncentral Student distribution with noncentrality parameter and degrees of freedom. The reference posterior (7) is proper provided n 1, m 1, and n + m 3. For further details, see Prez (2005). e The reference posterior (7) has the form ( | t, n, m) () p(t | , n, m), where p(t | x , y , , m, m) = p(t | , n, m) is the sampling distribution of t. Thus, the reference prior is consistent under marginalization (cf. Dawid, Stone and Zidek, 1973). The intrinsic statistic. The reference posterior for may now be used to obtain the required intrinsic test statistic. Indeed, substituting into (5) yields Z h(n, m) 2 n+m log 1 + ( | t, m, n) d, (8) d(H0 | z) = d(H0 | t, m, n) = 2 2(m + n) 0 where ( | t, m, n) is given by (7). This has no simple analytical expression but may easily be obtained by one-dimensional numerical integration. Example. The derivation of the appropriate reference prior allows us to draw precise conclusions even when data are extremely scarce. As an illustration, consider a (minimal) sample of three observations with x = {4, 6} and = {0}, so that n = 2, y m = 1, x = 5, y = 0, s = 2, h(n, m) = 4/3 and t = 5/ 3. If may be veried numerically that the reference posterior probability that < 0 is Z 0 Pr[ < 0 | t, h, n] = ( | t, m, n) d = 0.0438,

directly suggesting some (mild) evidence against = 0 and, hence, against x = y . On the other hand, using the formal procedure described above, the numerical value of intrinsic statistic to test H0 {x = y } is Z 2 3 log 1 + 2 ( | t, m, n) d = 1.193 = log[6.776]. d(H0 | t, m, n) = 2 9 0

576

Jos M. Bernardo and Sergio Prez e e

Thus, given the available data, the expected value of the average (under repeated sampling) of the log-likelihood ratio against H0 is 1.193 (so that likelihood ratios may be expected to be about 6.8 against H0 ), which provides a precise measure of the available evidence against the hypothesis H0 {x = y }. This (moderate) evidence against H0 is not captured by the conventional frequentist analysis of this problem. Indeed, since the sampling distribution of t under H0 is a standard Student distribution with n + m 2 degrees of freedom, the p-value which corresponds to the two-sided test for H0 is 2(1 Tm+n2 {|t|}), where T is the cumulative distribution function of an Student distribution with degrees of freedom (see, e.g., DeGroot and Schervish, 2002, Section 8.6). In this case, this produces a p-value of 0.21 which, contrary to the preceding analysis, suggests lack of sucient evidence in the data against H0 . Further results. The full version of this paper (Bernardo and Prez, 2007) contains e analytic asymptotic approximations to the intrinsic test statistic (8), analyzes the behaviour of the proposed procedure under repeated sampling (both when H0 is true and when it is false), and discusses its generalization to the case of possibly dierent variances. REFERENCES

Berger, J. O. (2006). The case for objective Bayesian analysis. Bayesian Analysis 1, 385402 and 457464 (with discussion). Berger, J. O. and Bernardo, J. M. (1992). On the development of reference priors. Bayesian Statistics 4 (J. M. Bernardo, J. O. Berger, A. P. Dawid and A. F. M. Smith, eds.) Oxford: University Press, 6177 (with discussion). Bernardo, J. M. (1979). Reference posterior distributions for Bayesian inference. J. Roy. Statist. Soc. B 41,113147. Bernardo, J. M. (2005a). Reference analysis. Handbook of Statistics 25, D. K. Dey and C. R. Rao, (eds.) Amsterdam: Elsevier1790. Bernardo, J. M. (2005b). Intrinsic credible regions: An objective Bayesian approach to interval estimation. Test 14, 317384. Bernardo, J. M. and Prez, S. (2007). Comparing normal means: New methods for an old e problem. Bayesian Analysis 2, 4548. Bernardo, J. M. and Rueda, R. (2002). Bayesian hypothesis testing: A reference approach. Internat. Statist. Rev. 70, 351372. Bernardo, J. M. and Smith, A. F. M. (1994). Bayesian Theory. Chichester: Wiley, (2nd edition to appear in 2008). DeGroot, M. H. and Schervish, M. J. (2002). Probability and Statistics, 3rd ed. Reading, MA: Addison-Wesley. Dawid, A. P., Stone, M. and Zidek, J. V. (1973). Marginalization paradoxes in Bayesian and structural inference J. Roy. Statist. Soc. B 35,189233 (with discussion). Jurez, M. A. (2005). Normal correlation: An objective Bayesian approach. Tech. Rep., CRiSM a 05-15, University of Warwick, UK. Prez, S. (2005). Objective Bayesian Methods for Mean Comparison. Ph.D. Thesis, Unie versidad de Valencia, Spain.

Potrebbero piacerti anche

- Models For Count Data With Overdispersion: 1 Extra-Poisson VariationDocumento7 pagineModels For Count Data With Overdispersion: 1 Extra-Poisson VariationMilkha SinghNessuna valutazione finora

- Carlo Part 6 - Hierarchical Bayesian Modeling Lecture - Notes - 6Documento12 pagineCarlo Part 6 - Hierarchical Bayesian Modeling Lecture - Notes - 6Mark AlexandreNessuna valutazione finora

- Statistical Undecidability - SSRN-Id1691165Documento4 pagineStatistical Undecidability - SSRN-Id1691165adeka1Nessuna valutazione finora

- A Bayesian Test For Excess Zeros in A Zero-Inflated Power Series DistributionDocumento17 pagineA Bayesian Test For Excess Zeros in A Zero-Inflated Power Series Distributionእስክንድር ተፈሪNessuna valutazione finora

- DeepayanSarkar BayesianDocumento152 pagineDeepayanSarkar BayesianPhat NguyenNessuna valutazione finora

- Score Tests For Heterogeneity and Overdispersion in Zero-Inflated Poisson and Binomial Regression ModelsDocumento16 pagineScore Tests For Heterogeneity and Overdispersion in Zero-Inflated Poisson and Binomial Regression Modelstamer El-AzabNessuna valutazione finora

- ECE523 Engineering Applications of Machine Learning and Data Analytics - Bayes and Risk - 1Documento7 pagineECE523 Engineering Applications of Machine Learning and Data Analytics - Bayes and Risk - 1wandalexNessuna valutazione finora

- 07 Fuzzyp-Valueintestingfuzzyhypotheseswithcrispdata - StatisticalPapersDocumento19 pagine07 Fuzzyp-Valueintestingfuzzyhypotheseswithcrispdata - StatisticalPapersZULFATIN NAFISAHNessuna valutazione finora

- Compound Distributions and Mixture Distributions: ParametersDocumento5 pagineCompound Distributions and Mixture Distributions: ParametersDennis ClasonNessuna valutazione finora

- Testing Non-Identifying RestrictionsDocumento26 pagineTesting Non-Identifying Restrictionskarun3kumarNessuna valutazione finora

- Phar 2813 RevisionDocumento19 paginePhar 2813 RevisionTrung NguyenNessuna valutazione finora

- Probability Probability Distribution Function Probability Density Function Random Variable Bayes' Rule Gaussian DistributionDocumento26 pagineProbability Probability Distribution Function Probability Density Function Random Variable Bayes' Rule Gaussian Distributionskumar165Nessuna valutazione finora

- A Step by Step Guide To Bi-Gaussian Disjunctive KrigingDocumento15 pagineA Step by Step Guide To Bi-Gaussian Disjunctive KrigingThyago OliveiraNessuna valutazione finora

- Lion 5 PaperDocumento15 pagineLion 5 Papermilp2Nessuna valutazione finora

- REU Project: Topics in Probability: Trevor Davis August 14, 2006Documento12 pagineREU Project: Topics in Probability: Trevor Davis August 14, 2006Epic WinNessuna valutazione finora

- Destercke 22 ADocumento11 pagineDestercke 22 AleftyjoyNessuna valutazione finora

- Multivariate Analysis From A Statistical Point of View: K.S. CranmerDocumento4 pagineMultivariate Analysis From A Statistical Point of View: K.S. CranmerBhawani SinghNessuna valutazione finora

- Modern Bayesian EconometricsDocumento100 pagineModern Bayesian Econometricsseph8765Nessuna valutazione finora

- Bayesian Structural Source Identification Using Generalized Gaussian PriorsDocumento8 pagineBayesian Structural Source Identification Using Generalized Gaussian PriorsMathieu AucejoNessuna valutazione finora

- Introduction To Bayesian Methods With An ExampleDocumento25 pagineIntroduction To Bayesian Methods With An ExampleLucas RobertoNessuna valutazione finora

- Math Supplement PDFDocumento17 pagineMath Supplement PDFRajat GargNessuna valutazione finora

- Agresti 9 Notes 2012Documento8 pagineAgresti 9 Notes 2012thanhtra023Nessuna valutazione finora

- Extended Bayesian Information Criteria For Model Selection With Large Model SpacesDocumento27 pagineExtended Bayesian Information Criteria For Model Selection With Large Model SpacesJuan CarNessuna valutazione finora

- Block 4 ST3189Documento25 pagineBlock 4 ST3189PeterParker1983Nessuna valutazione finora

- Pham Gia2006Documento20 paginePham Gia2006Hứa Tấn SangNessuna valutazione finora

- Diseño ExperimentosDocumento16 pagineDiseño Experimentosvsuarezf2732Nessuna valutazione finora

- 1983 S Baker R D Cousins-NIM 221 437-442Documento6 pagine1983 S Baker R D Cousins-NIM 221 437-442shusakuNessuna valutazione finora

- Statistical Inference Based On Pooled Data: A Moment-Based Estimating Equation ApproachDocumento23 pagineStatistical Inference Based On Pooled Data: A Moment-Based Estimating Equation ApproachdinadinicNessuna valutazione finora

- Downloadartificial Intelligence - Unit 3Documento20 pagineDownloadartificial Intelligence - Unit 3blazeNessuna valutazione finora

- f (x) ≥0, Σf (x) =1. We can describe a discrete probability distribution with a table, graph,Documento4 paginef (x) ≥0, Σf (x) =1. We can describe a discrete probability distribution with a table, graph,missy74Nessuna valutazione finora

- Sparse Precision Matrix Estimation Via Lasso Penalized D-Trace LossDocumento18 pagineSparse Precision Matrix Estimation Via Lasso Penalized D-Trace LossReshma KhemchandaniNessuna valutazione finora

- Bayes' Estimators: The MethodDocumento7 pagineBayes' Estimators: The MethodsammittalNessuna valutazione finora

- Random Variable & Probability Distribution: Third WeekDocumento51 pagineRandom Variable & Probability Distribution: Third WeekBrigitta AngelinaNessuna valutazione finora

- On+Fuzzy+$h$ Ideals+of+HemiringsDocumento9 pagineOn+Fuzzy+$h$ Ideals+of+HemiringsmsmramansrimathiNessuna valutazione finora

- Quality Control: Methodology and ApplicationsDocumento12 pagineQuality Control: Methodology and Applicationsghgh140Nessuna valutazione finora

- Run-Sort-Rerun: Escaping Batch Size Limitations in Sliced Wasserstein Generative ModelsDocumento11 pagineRun-Sort-Rerun: Escaping Batch Size Limitations in Sliced Wasserstein Generative ModelsAni SafitriNessuna valutazione finora

- Statistics For Data Science: Topic: Hypothesis TestDocumento52 pagineStatistics For Data Science: Topic: Hypothesis TestBill FASSINOUNessuna valutazione finora

- An Introduction To Objective Bayesian Statistics PDFDocumento69 pagineAn Introduction To Objective Bayesian Statistics PDFWaterloo Ferreira da SilvaNessuna valutazione finora

- Stuart 81Documento25 pagineStuart 81Sebastian CulquiNessuna valutazione finora

- On Lower Bounds For Statistical Learning TheoryDocumento17 pagineOn Lower Bounds For Statistical Learning TheoryBill PetrieNessuna valutazione finora

- MIT18.650. Statistics For Applications Fall 2016. Problem Set 3Documento3 pagineMIT18.650. Statistics For Applications Fall 2016. Problem Set 3yilvasNessuna valutazione finora

- Pattern Basics and Related OperationsDocumento21 paginePattern Basics and Related OperationsSri VatsaanNessuna valutazione finora

- An Uniformly Minimum Variance Unbiased Point Estimator Using Fuzzy ObservationsDocumento11 pagineAn Uniformly Minimum Variance Unbiased Point Estimator Using Fuzzy ObservationsujwalNessuna valutazione finora

- Information Loss in Black Holes And/Or Conscious Beings?Documento10 pagineInformation Loss in Black Holes And/Or Conscious Beings?Eduardo GallardoNessuna valutazione finora

- MIT14 382S17 Lec6Documento26 pagineMIT14 382S17 Lec6jarod_kyleNessuna valutazione finora

- The Bayesian Approach To Parameter EstimationDocumento10 pagineThe Bayesian Approach To Parameter EstimationsudhanjayaNessuna valutazione finora

- Towards A Unified Definition of Maximum Likelihood: Key AndphrasesDocumento11 pagineTowards A Unified Definition of Maximum Likelihood: Key AndphrasesSonya CampbellNessuna valutazione finora

- Hypothesis Testing ReviewDocumento5 pagineHypothesis Testing ReviewhalleyworldNessuna valutazione finora

- Notes 2 BayesianStatisticsDocumento6 pagineNotes 2 BayesianStatisticsAtonu Tanvir HossainNessuna valutazione finora

- Perspectiva Estadística de La Homología Persistente.Documento26 paginePerspectiva Estadística de La Homología Persistente.ernesto tamporaNessuna valutazione finora

- Berger Peric Chi Wiley 3Documento18 pagineBerger Peric Chi Wiley 3camilo sotoNessuna valutazione finora

- Extreme Value ConditionsDocumento18 pagineExtreme Value ConditionsLizzy BordenNessuna valutazione finora

- AIML-Unit 5 NotesDocumento45 pagineAIML-Unit 5 NotesHarshithaNessuna valutazione finora

- 2014 Greenbelt BokDocumento8 pagine2014 Greenbelt BokKhyle Laurenz DuroNessuna valutazione finora

- Statistical Data Analysis: PH4515: 1 Course StructureDocumento5 pagineStatistical Data Analysis: PH4515: 1 Course StructurePhD LIVENessuna valutazione finora

- 3 Bayesian Deep LearningDocumento33 pagine3 Bayesian Deep LearningNishanth ManikandanNessuna valutazione finora

- A Beginner's Notes On Bayesian Econometrics (Art)Documento21 pagineA Beginner's Notes On Bayesian Econometrics (Art)amerdNessuna valutazione finora

- AppendixDocumento12 pagineAppendixbewareofdwarvesNessuna valutazione finora

- Analysis of Variance ANOVADocumento3 pagineAnalysis of Variance ANOVAnorhanifah matanogNessuna valutazione finora

- BUSI1013 - Statistics For Business Winter 2021 Assignment 3 UNITS 5, 7 and 8 Due Date: Sunday February 28, 2021 11:00 PM PT NAME: - STUDENT IDDocumento13 pagineBUSI1013 - Statistics For Business Winter 2021 Assignment 3 UNITS 5, 7 and 8 Due Date: Sunday February 28, 2021 11:00 PM PT NAME: - STUDENT IDravneetNessuna valutazione finora

- Econometrics2006 PDFDocumento196 pagineEconometrics2006 PDFAmz 1Nessuna valutazione finora

- 3.0 ErrorVar and OLSvar-1Documento42 pagine3.0 ErrorVar and OLSvar-1Malik MahadNessuna valutazione finora

- Parametric TestDocumento2 pagineParametric TestPankaj2cNessuna valutazione finora

- Queueing TheoryDocumento14 pagineQueueing TheorympjuserNessuna valutazione finora

- PX X PX X X: F71Sm Statistical Methods RJG Tutorial On 4 Special DistributionsDocumento5 paginePX X PX X X: F71Sm Statistical Methods RJG Tutorial On 4 Special Distributionswill bNessuna valutazione finora

- Data Analysis Using Spss T Test 1224391361027694 8Documento24 pagineData Analysis Using Spss T Test 1224391361027694 8Rahman SurkhyNessuna valutazione finora

- Statistical symbols & probability symbols (μ,σ,..Documento2 pagineStatistical symbols & probability symbols (μ,σ,..John Bond100% (1)

- V47i01 PDFDocumento38 pagineV47i01 PDFzibunansNessuna valutazione finora

- Binomial DistributionDocumento9 pagineBinomial DistributionMaqsood IqbalNessuna valutazione finora

- Statistical Soup - ANOVA, ANCOVA, MANOVA, & MANCOVA - Stats Make Me Cry ConsultingDocumento3 pagineStatistical Soup - ANOVA, ANCOVA, MANOVA, & MANCOVA - Stats Make Me Cry ConsultingsapaNessuna valutazione finora

- ENGN 3226 Digital Communications Problem Set #8 Block Codes: Australian National University Department of EngineeringDocumento13 pagineENGN 3226 Digital Communications Problem Set #8 Block Codes: Australian National University Department of EngineeringbiswajitntpcNessuna valutazione finora

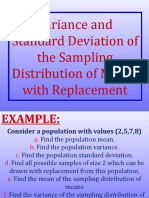

- Variance and Standard Deviation of The Sampling Distribution of Means With ReplacementDocumento33 pagineVariance and Standard Deviation of The Sampling Distribution of Means With ReplacementcedrickNessuna valutazione finora

- 6.5 Checkpoint Reliability and MaintenanceDocumento5 pagine6.5 Checkpoint Reliability and MaintenanceherrajohnNessuna valutazione finora

- MT427 Notebook 4 Fall 2013/2014: Prepared by Professor Jenny BaglivoDocumento27 pagineMT427 Notebook 4 Fall 2013/2014: Prepared by Professor Jenny BaglivoOmar Olvera GNessuna valutazione finora

- MSEDocumento3 pagineMSEAjay BarwalNessuna valutazione finora

- Data PreparationDocumento17 pagineData PreparationJoyce ChoyNessuna valutazione finora

- Quiz 2 CH 5 7 9 7.14.19Documento7 pagineQuiz 2 CH 5 7 9 7.14.19Kevin NyasogoNessuna valutazione finora

- Theil ProcedureDocumento9 pagineTheil ProcedureVikasNessuna valutazione finora

- 6.1 There Is An Urn Containing 9 Balls, Which Can Be Either Green or Red. The Number of Red Balls in TheDocumento37 pagine6.1 There Is An Urn Containing 9 Balls, Which Can Be Either Green or Red. The Number of Red Balls in TheCorneliu SurlaNessuna valutazione finora

- 2010 Moderation SEM LinDocumento18 pagine2010 Moderation SEM LinUsman BaigNessuna valutazione finora

- Appendix B Probability 2015Documento41 pagineAppendix B Probability 2015SydneyNessuna valutazione finora

- Probability LectureNotesDocumento16 pagineProbability LectureNotesbishu_iitNessuna valutazione finora

- Statistical Inference For Decision MakingDocumento7 pagineStatistical Inference For Decision MakingNitin AmesurNessuna valutazione finora

- Improving Short Term Load Forecast Accuracy Via Combining Sister ForecastsDocumento17 pagineImproving Short Term Load Forecast Accuracy Via Combining Sister Forecastsjbsimha3629Nessuna valutazione finora

- 4.1 Multiple Choice: Chapter 4 Linear Regression With One RegressorDocumento33 pagine4.1 Multiple Choice: Chapter 4 Linear Regression With One Regressordaddy's cockNessuna valutazione finora

- Reporting MANOVADocumento20 pagineReporting MANOVAnaseemhyderNessuna valutazione finora

- Panel Data: Fixed and Random Effects: I1 0 I1 0 I I1Documento8 paginePanel Data: Fixed and Random Effects: I1 0 I1 0 I I1Waqar HassanNessuna valutazione finora

- IASSC ICBB Question AnswerDocumento10 pagineIASSC ICBB Question AnswerEtienne VeronNessuna valutazione finora