Discriminant Analysis: 5.1 The Maximum Likelihood (ML) Rule

Uploaded by George Wang

5. Discriminant Analysis

Given $k$ populations (groups) $\Pi_1, \ldots, \Pi_k$, we suppose that an individual from $\Pi_j$ has p.d.f. $f_j(x)$ for a set of $p$ measurements $x$.

The purpose of discriminant analysis is to allocate an individual to one of the groups $\Pi_j$ on the basis of $x$, making as few "mistakes" as possible. For example, a patient presents at a doctor's surgery with a set of symptoms $x$. The symptoms suggest a number of possible disease groups $\Pi_j$ to which the patient might belong. What is the most likely diagnosis?

The aim initially is to find a partition of $\mathbb{R}^p$ into disjoint regions $R_1, \ldots, R_k$ together with a decision rule

$$x \in R_j \;\Rightarrow\; \text{allocate } x \text{ to } \Pi_j.$$

The decision rule will be more accurate if "$\Pi_j$ has most of its probability concentrated in $R_j$" for each $j$.

5.1 The maximum likelihood (ML) rule

Allocate $x$ to the population $\Pi_j$ that gives the largest likelihood to $x$. Choose $j$ by

$$L_j(x) = \max_{1 \le i \le k} L_i(x)$$

(break ties arbitrarily).

Result 1

If $\Pi_i$ is the multivariate normal (MVN) population $N_p(\mu_i, \Sigma)$ for $i = 1, \ldots, k$, the ML rule allocates $x$ to the population $\Pi_i$ that minimizes the Mahalanobis distance between $x$ and $\mu_i$.

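Proof

$$L_i(x) = |2\pi\Sigma|^{-1/2} \exp\left\{ -\tfrac{1}{2} (x - \mu_i)^T \Sigma^{-1} (x - \mu_i) \right\}$$

so the likelihood is maximized when the quadratic form $(x - \mu_i)^T \Sigma^{-1} (x - \mu_i)$ in the exponent, i.e. the squared Mahalanobis distance, is minimized.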
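Result 1 can be sketched in Python with NumPy. This is only an illustration: the function name `ml_allocate` and the toy means and covariance matrix are assumptions, not part of the notes. With a common covariance matrix, the ML rule amounts to picking the population whose mean is nearest to $x$ in Mahalanobis distance.

```python
import numpy as np

def ml_allocate(x, means, cov):
    """Return (index, distances): the zero-based index of the population whose
    mean is closest to x in squared Mahalanobis distance, assuming a common
    covariance matrix, together with all the squared distances."""
    x = np.asarray(x, dtype=float)
    dists = []
    for mu in means:
        diff = x - np.asarray(mu, dtype=float)
        # (x - mu)^T Sigma^{-1} (x - mu), via a linear solve rather than an explicit inverse
        dists.append(float(diff @ np.linalg.solve(cov, diff)))
    # np.argmin returns the first minimizer, which is one way to break ties arbitrarily
    return int(np.argmin(dists)), dists

# Hypothetical toy setting: three bivariate populations sharing one covariance matrix.
means = [np.array([0.0, 0.0]), np.array([3.0, 0.0]), np.array([0.0, 3.0])]
cov = np.array([[1.0, 0.5], [0.5, 1.0]])

j, dists = ml_allocate([2.5, 0.4], means, cov)
print(j, dists)
```

Here the point is allocated to the second population (index 1), whose mean is closest once the correlation between the two variables is taken into account.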

Result 2

When $k = 2$ the ML rule allocates $x$ to $\Pi_1$ if

$$d^T (x - \mu) > 0 \tag{5.1}$$

where $d = \Sigma^{-1}(\mu_1 - \mu_2)$ and $\mu = \tfrac{1}{2}(\mu_1 + \mu_2)$, and to $\Pi_2$ otherwise.

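Proof

For the two-group case, the ML rule is to allocate $x$ to $\Pi_1$ if

$$(x - \mu_1)^T \Sigma^{-1} (x - \mu_1) < (x - \mu_2)^T \Sigma^{-1} (x - \mu_2)$$

which reduces to

$$\begin{aligned}
2 d^T x &> \mu_1^T \Sigma^{-1} \mu_1 - \mu_2^T \Sigma^{-1} \mu_2 \\
&= (\mu_1 - \mu_2)^T \Sigma^{-1} (\mu_1 + \mu_2) \\
&= d^T (\mu_1 + \mu_2).
\end{aligned}$$

Hence the result. The function

$$h(x) = (\mu_1 - \mu_2)^T \Sigma^{-1} \left[ x - \tfrac{1}{2}(\mu_1 + \mu_2) \right] \tag{5.2}$$

is known as the discriminant function (DF). In this case the DF is linear in $x$.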
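As a numerical sanity check of Result 2, the following sketch compares the sign of the linear discriminant function with a direct comparison of the two squared Mahalanobis distances. The parameter values and helper names (`discriminant`, `sq_mahalanobis`) are hypothetical and chosen only for illustration.

```python
import numpy as np

def discriminant(x, mu1, mu2, cov):
    """h(x) = (mu1 - mu2)^T Sigma^{-1} [x - (mu1 + mu2)/2]."""
    d = np.linalg.solve(cov, mu1 - mu2)      # d = Sigma^{-1} (mu1 - mu2)
    return float(d @ (x - 0.5 * (mu1 + mu2)))

def sq_mahalanobis(x, mu, cov):
    """Squared Mahalanobis distance (x - mu)^T Sigma^{-1} (x - mu)."""
    diff = x - mu
    return float(diff @ np.linalg.solve(cov, diff))

# Hypothetical parameters, not taken from the notes.
mu1 = np.array([1.0, 2.0])
mu2 = np.array([3.0, 1.0])
cov = np.array([[2.0, 0.3], [0.3, 1.0]])

rng = np.random.default_rng(0)
points = rng.normal(size=(200, 2)) * 3.0

# h(x) > 0 should hold exactly when x is closer to mu1 than to mu2.
agree = all(
    (discriminant(x, mu1, mu2, cov) > 0)
    == (sq_mahalanobis(x, mu1, cov) < sq_mahalanobis(x, mu2, cov))
    for x in points
)
print(agree)
```

The agreement follows from the identity that the difference of the two squared distances equals $2h(x)$, which is what the reduction in the proof establishes.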

5.2 Sample ML rule

In practice $\mu_1$, $\mu_2$, $\Sigma$ are estimated by, respectively, $\bar{x}_1$, $\bar{x}_2$, $S_p$, where $S_p$ is the pooled (unbiased) estimator of the covariance matrix.

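Example

The eminent statistician R.A. Fisher took measurements of 4 variables on samples of size 50 from each of 3 species of iris. Two of the variables, $x_1$ = sepal length and $x_2$ = sepal width, gave the following data on species I and II:

$$\bar{x}_1 = \begin{pmatrix} 5.0 \\ 3.4 \end{pmatrix}, \quad \bar{x}_2 = \begin{pmatrix} 6.0 \\ 2.8 \end{pmatrix}, \quad S_1 = \begin{pmatrix} 0.12 & 0.10 \\ 0.10 & 0.14 \end{pmatrix}, \quad S_2 = \begin{pmatrix} 0.26 & 0.08 \\ 0.08 & 0.10 \end{pmatrix}$$

(The data have been rounded for clarity.)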

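$$S_p = \frac{50 S_1 + 50 S_2}{98} = \begin{pmatrix} 0.19 & 0.09 \\ 0.09 & 0.12 \end{pmatrix}$$

Hence

$$d = S_p^{-1}(\bar{x}_1 - \bar{x}_2) = \begin{pmatrix} 0.19 & 0.09 \\ 0.09 & 0.12 \end{pmatrix}^{-1} \begin{pmatrix} -1.0 \\ 0.6 \end{pmatrix} = \begin{pmatrix} -11.4 \\ 14.1 \end{pmatrix}$$

$$\hat{\mu} = \tfrac{1}{2}(\bar{x}_1 + \bar{x}_2) = \begin{pmatrix} 5.5 \\ 3.1 \end{pmatrix}$$

giving the rule:

Allocate $x$ to $\Pi_1$ if

$$-11.4\,(x_1 - 5.5) + 14.1\,(x_2 - 3.1) > 0,$$

that is,

$$-11.4\,x_1 + 14.1\,x_2 + 19.0 > 0.$$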
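The sample rule can be reproduced, approximately, from the rounded summary statistics quoted in the example. The sketch below is an illustration only; the helper name `allocate` is an assumption. Because the inputs are rounded, it yields $d \approx (-11.6, 13.6)$ rather than the $(-11.4, 14.1)$ reported in the notes, which were presumably computed from the unrounded data.

```python
import numpy as np

n1 = n2 = 50
xbar1 = np.array([5.0, 3.4])     # species I mean (sepal length, sepal width)
xbar2 = np.array([6.0, 2.8])     # species II mean
S1 = np.array([[0.12, 0.10], [0.10, 0.14]])
S2 = np.array([[0.26, 0.08], [0.08, 0.10]])

# Pooled covariance estimate, using the divisor n1 + n2 - 2 = 98 from the example.
Sp = (n1 * S1 + n2 * S2) / (n1 + n2 - 2)
d = np.linalg.solve(Sp, xbar1 - xbar2)   # d = Sp^{-1} (xbar1 - xbar2)
mu_hat = 0.5 * (xbar1 + xbar2)           # midpoint of the two sample means

def allocate(x):
    """Return 1 if the sample ML rule assigns x to species I, otherwise 2."""
    return 1 if d @ (np.asarray(x, dtype=float) - mu_hat) > 0 else 2

print(np.round(Sp, 2))
print(np.round(d, 1))
print(mu_hat)
print(allocate(xbar1), allocate(xbar2))
```

As expected, each sample mean is allocated to its own species.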

5.3 Misclassification probabilities

The misclassification probabilities $p_{ij}$, defined as

$$p_{ij} = \Pr[\text{allocate to } \Pi_i \text{ when in fact from } \Pi_j],$$

form a $k \times k$ matrix, of which the diagonal elements $p_{ii}$ are a measure of the classifier's accuracy.

For the case $k = 2$

$$p_{12} = \Pr[h(x) > 0 \mid \Pi_2].$$

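Since $h(x) = d^T(x - \mu)$ is a linear compound of $x$, it has a (univariate) normal distribution. Given that $x \in \Pi_2$:

$$E[h(x)] = d^T\left[\mu_2 - \tfrac{1}{2}(\mu_1 + \mu_2)\right] = \tfrac{1}{2} d^T(\mu_2 - \mu_1) = -\tfrac{1}{2}\Delta^2$$

where $\Delta^2 = (\mu_2 - \mu_1)^T \Sigma^{-1} (\mu_2 - \mu_1)$ is the squared Mahalanobis distance between $\mu_2$ and $\mu_1$.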

The variance of $h(x)$ is

$$d^T \Sigma d = (\mu_2 - \mu_1)^T \Sigma^{-1} \Sigma \Sigma^{-1} (\mu_2 - \mu_1) = (\mu_2 - \mu_1)^T \Sigma^{-1} (\mu_2 - \mu_1) = \Delta^2.$$

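Standardizing $h(x)$ by its mean $-\tfrac{1}{2}\Delta^2$ and standard deviation $\Delta$ gives

\begin{align}
p_{12} &= \Pr[h(x) > 0] \\
&= \Pr\left[\frac{h(x) + \tfrac{1}{2}\Delta^2}{\Delta} > \frac{\tfrac{1}{2}\Delta^2}{\Delta}\right] \tag{1} \\
&= \Pr\left[Z > \tfrac{1}{2}\Delta\right] = 1 - \Phi\left(\tfrac{1}{2}\Delta\right) \tag{5.3} \\
&= \Phi\left(-\tfrac{1}{2}\Delta\right) \tag{2}
\end{align}

where $Z \sim N(0, 1)$ and $\Phi$ is the standard normal distribution function.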

By symmetry, if we write $\Delta = \Delta_{12}$, the misclassification rate is $p_{21} = \Phi\left(-\tfrac{1}{2}\Delta_{21}\right)$, and since $\Delta_{12} = \Delta_{21}$ we have

$$p_{12} = p_{21}.$$
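The formula $p_{12} = \Phi(-\tfrac{1}{2}\Delta)$ can be checked by simulation. The sketch below uses a hypothetical univariate setting ($p = 1$, with made-up values for the means and standard deviation) and only the Python standard library; it draws individuals from $\Pi_2$ and counts how often $h(x) > 0$.

```python
import math
import random

def normal_cdf(z):
    """Standard normal CDF Phi(z), via the complementary error function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

# Hypothetical univariate populations: Pi_1 = N(mu1, sigma^2), Pi_2 = N(mu2, sigma^2).
mu1, mu2, sigma = 0.0, 2.0, 1.0
d = (mu1 - mu2) / sigma**2          # d = Sigma^{-1} (mu1 - mu2)
mid = 0.5 * (mu1 + mu2)             # mu = (mu1 + mu2) / 2
delta = abs(mu1 - mu2) / sigma      # Mahalanobis distance between the means

rng = random.Random(0)
n = 100_000
# Draw from Pi_2 and count how often h(x) > 0, i.e. x is wrongly sent to Pi_1.
errors = sum(d * (rng.gauss(mu2, sigma) - mid) > 0 for _ in range(n))
p12_simulated = errors / n
p12_theory = normal_cdf(-delta / 2.0)
print(p12_simulated, p12_theory)
```

With $\Delta = 2$ the theoretical value is $\Phi(-1) \approx 0.159$, and the simulated proportion should agree with it up to Monte Carlo error.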

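Example (contd.)

We can estimate the misclassification probability from the sample Mahalanobis distance between $\bar{x}_2$ and $\bar{x}_1$:

$$D^2 = (\bar{x}_2 - \bar{x}_1)^T S_p^{-1} (\bar{x}_2 - \bar{x}_1) = \begin{pmatrix} 1.0 & -0.6 \end{pmatrix} \begin{pmatrix} 11.4 \\ -14.1 \end{pmatrix} = 19.9$$

$$\Phi\left(-\tfrac{1}{2}D\right) = \Phi(-2.23) = 0.013$$

The misclassification rate is 1.3%.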
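The arithmetic of this estimate can be reproduced with the standard library alone. The function names below are illustrative assumptions; the input $D^2 = 19.9$ is the value quoted in the example.

```python
import math

def normal_cdf(z):
    """Standard normal CDF Phi(z), via the complementary error function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def misclassification_rate(dist_sq):
    """Phi(-D/2) for a squared Mahalanobis distance D^2."""
    return normal_cdf(-0.5 * math.sqrt(dist_sq))

D_sq = 19.9                          # sample value quoted in the example
rate = misclassification_rate(D_sq)
print(round(0.5 * math.sqrt(D_sq), 2), round(rate, 3))
```

This gives $D/2 \approx 2.23$ and a rate of about 0.013, in line with the 1.3% stated above.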

5.4 Optimality of ML rule

We can show that the ML rule minimizes the probability of misclassification if an individual is a priori equally likely to belong to any population.

Let $M$ be the event of a misclassification and consider a decision rule $\delta(x)$ represented as follows:

$$\delta_i(x) = \begin{cases} 1 & \text{if } x \text{ is assigned to } \Pi_i \\ 0 & \text{otherwise} \end{cases}$$

i.e. $\delta = (\delta_1, \delta_2, \ldots, \delta_k)$ is a 0-1 vector everywhere in the space of $x$, and $\delta_i(x) = 1$ for $x \in R_i$.

Recall that the classifier assigns $x$ to $\Pi_i$ if $x \in R_i$.

The ML rule is represented as

$$\delta_i^{ML}(x) = \begin{cases} 1 & \text{if } f_i(x) \ge f_j(x) \text{ for all } j \ne i \\ 0 & \text{otherwise} \end{cases}$$

Ties can be ignored, arbitrarily decided, or randomized by allowing $\delta_i = \tfrac{1}{t}$ if $t$ populations (likelihoods) are tied.

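The misclassification probabilities are

\begin{align}
p_{ij} &= \Pr[\text{allocate to } \Pi_i \text{ when in fact from } \Pi_j] \\
&= \Pr(\delta_i = 1 \mid x \in \Pi_j) \\
&= \int \delta_i(x) f_j(x)\,dx \\
&= \int_{R_i} f_j(x)\,dx \tag{3}
\end{align}

where $x$ is equally likely to come from any of the populations $\Pi_j$ $(j = 1, \ldots, k)$.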

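The total probability of misclassification is

\begin{align}
\Pr(M) &= \sum_{i=1}^{k} \Pr(M \mid x \in \Pi_i) \Pr(x \in \Pi_i) \\
&= \frac{1}{k} \sum_{i=1}^{k} (1 - p_{ii}) \\
&= 1 - \frac{1}{k} \sum_{i=1}^{k} p_{ii} \tag{4}
\end{align}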

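Clearly we need to maximize the sum of the probabilities of correct classification, which is the trace of the misclassification matrix:

$$\begin{aligned}
\sum_{i=1}^{k} p_{ii} &= \sum_{i=1}^{k} \int \delta_i(x) f_i(x)\,dx \\
&= \int \sum_{i=1}^{k} \delta_i(x) f_i(x)\,dx \\
&\le \int \max_i f_i(x)\,dx
\end{aligned}$$

The bound holds because, at each $x$, the $\delta_i(x)$ are non-negative and sum to one. It is attained when $\delta$ puts all its weight on a population with the largest $f_i(x)$, which is exactly the ML rule. This shows that the trace is maximized for the ML rule and therefore $\Pr(M)$ is minimized.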
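A small numerical illustration of this optimality result, under assumptions that are not in the notes: two hypothetical univariate populations $N(0, 1)$ and $N(2, 1)$ with equal priors, and the family of rules "allocate to $\Pi_1$ if $x < c$". For these densities the ML rule corresponds to the cutoff $c = 1$, the point where the two densities are equal, and a grid search over $c$ should recover it as the minimizer of $\Pr(M)$.

```python
import math

def normal_cdf(z):
    """Standard normal CDF Phi(z), via the complementary error function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

mu1, mu2 = 0.0, 2.0   # hypothetical populations N(0, 1) and N(2, 1), equal priors

def prob_misclassification(c):
    """Pr(M) for the rule 'allocate to Pi_1 if x < c, otherwise to Pi_2'."""
    p11 = normal_cdf(c - mu1)          # Pi_1 individual correctly allocated
    p22 = 1.0 - normal_cdf(c - mu2)    # Pi_2 individual correctly allocated
    return 1.0 - 0.5 * (p11 + p22)     # 1 - (1/k) * sum of p_ii with k = 2

# Grid of candidate cutoffs from -1.00 to 3.00 in steps of 0.01.
cutoffs = [i / 100 for i in range(-100, 301)]
best = min(cutoffs, key=prob_misclassification)
print(best, prob_misclassification(best))
```

The minimizing cutoff on the grid is the ML cutoff, with $\Pr(M) = \Phi(-1) \approx 0.159$.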

5.5 Bayes Rule

The Bayes rule generalizes the ML rule by introducing a set of prior probabilities $\pi_i$, assumed known, where

$$\pi_i = \Pr(\text{individual belongs to } \Pi_i).$$

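The misclassification probability becomes

$$\begin{aligned}
\Pr(M) &= \sum_{i=1}^{k} \Pr(M \mid x \in \Pi_i) \Pr(x \in \Pi_i) \\
&= \sum_{i=1}^{k} (1 - p_{ii})\, \pi_i \\
&= 1 - \sum_{i=1}^{k} p_{ii}\, \pi_i
\end{aligned}$$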

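The previous analysis carries across as follows:

$$\begin{aligned}
\sum_{i=1}^{k} p_{ii}\, \pi_i &= \sum_{i=1}^{k} \pi_i \int \delta_i(x) f_i(x)\,dx \\
&= \int \sum_{i=1}^{k} \pi_i\, \delta_i(x) f_i(x)\,dx \\
&\le \int \max_i \pi_i f_i(x)\,dx
\end{aligned}$$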

The Bayes rule assigns $x$ to the population $\Pi_j$ that maximizes the posterior probability $p(j \mid x) \propto \pi_j f_j(x)$.

This rule also minimizes the probability of misclassification $\Pr(M)$.
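A minimal sketch of the Bayes rule, assuming two hypothetical univariate normal populations and illustrative names (`bayes_allocate`, `normal_pdf`). It shows that with unequal priors the allocation can differ from the ML rule, which is recovered as the equal-prior special case.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def bayes_allocate(x, priors, densities):
    """Return (index, posteriors): the zero-based index maximizing
    pi_j * f_j(x), together with the posterior probabilities p(j | x)."""
    weighted = [pi * f(x) for pi, f in zip(priors, densities)]
    total = sum(weighted)                       # f(x) = sum_j pi_j f_j(x)
    posteriors = [w / total for w in weighted]
    best = max(range(len(weighted)), key=weighted.__getitem__)
    return best, posteriors

# Hypothetical populations N(0, 1) and N(2, 1).
densities = [lambda x: normal_pdf(x, 0.0, 1.0),
             lambda x: normal_pdf(x, 2.0, 1.0)]

x = 1.2
ml_choice, _ = bayes_allocate(x, [0.5, 0.5], densities)     # equal priors: the ML rule
bayes_choice, post = bayes_allocate(x, [0.8, 0.2], densities)
print(ml_choice, bayes_choice, post)
```

At $x = 1.2$ the second density is larger, so the ML rule picks the second population, but a prior of 0.8 on the first population is enough to reverse the decision.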

5.6 Minimizing Expected Loss

We can also introduce unequal costs of misclassification.

Let $c_{ij} = c(i \mid j)$ be the cost of assigning an individual $x \in \Pi_j$ to $\Pi_i$.

Generally we suppose $c_{ii} = 0$.

Definition

The expected cost of misclassification is known as the Bayes risk:

$$R_i(x) = \sum_{j=1}^{k} c(i \mid j)\, p(j \mid x)$$

is the risk or expected loss conditional on $x$ and taking action $i$, where

$$p(j \mid x) = \frac{\pi_j f_j(x)}{f(x)} \quad \text{and} \quad f(x) = \sum_{j=1}^{k} \pi_j f_j(x).$$

Definition

The overall risk of a rule defined by $\delta$ is the expected loss at $x$:

$$R(x) = \sum_{i=1}^{k} \delta_i(x) R_i(x)$$

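We can show that it is optimal to take the action $i$ that minimizes the Bayes risk.

$$\begin{aligned}
E[R(x)] &= \int \left\{ \sum_{i=1}^{k} \delta_i(x) R_i(x) \right\} f(x)\,dx \\
&\ge \int \sum_{i=1}^{k} \delta_i(x) \left\{ \min_{l} R_l(x) \right\} f(x)\,dx
= \int \left\{ \min_{l} R_l(x) \right\} f(x)\,dx
\end{aligned}$$

since the $\delta_i(x)$ are non-negative and sum to one at each $x$. The bound is attained by the rule that, at each $x$, takes the action with the smallest $R_i(x)$. Hence the overall expected loss is minimized by minimizing the Bayes risk at each $x$.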

Example

Given two populations $\Pi_1$, $\Pi_2$, suppose $c(2 \mid 1) = 5$ and $c(1 \mid 2) = 10$.

Suppose that 20% of the population belong to $\Pi_2$; then $\pi_1 = 0.8$ and $\pi_2 = 0.2$.

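Given a new individual $x$, the Bayes risk of assigning $x$ to $\Pi_1$ is

$$R_1(x) = \sum_{j=1}^{2} c(1 \mid j)\, p(j \mid x) \propto 10 \times 0.2 \times f_2(x) = 2 f_2(x)$$

where the factor $1/f(x)$, common to all actions, has been dropped because it does not affect the comparison. The Bayes risk of assigning $x$ to $\Pi_2$ is

$$R_2(x) = \sum_{j=1}^{2} c(2 \mid j)\, p(j \mid x) \propto 5 \times 0.8 \times f_1(x) = 4 f_1(x)$$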

Suppose that a new individual $x_0$ gives $f_1(x_0) = 0.3$ and $f_2(x_0) = 0.4$; then

$$2 \times 0.4 = 0.8 < 4 \times 0.3 = 1.2$$

so we assign $x_0$ to $\Pi_1$.
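The example can be reproduced with a few lines of Python. The function name `bayes_risks` and the cost-matrix layout are illustrative assumptions. Unlike the hand calculation, which compares $0.8$ and $1.2$, this sketch divides by $f(x_0) = 0.8 \times 0.3 + 0.2 \times 0.4 = 0.32$, so it reports the normalized risks $2.5$ and $3.75$; the ordering, and hence the decision, is the same.

```python
def bayes_risks(costs, priors, density_values):
    """R_i(x) = sum_j c(i|j) p(j|x), where costs[i][j] holds c(i+1 | j+1)
    and density_values[j] holds f_{j+1}(x)."""
    weighted = [pi * f for pi, f in zip(priors, density_values)]
    fx = sum(weighted)                          # f(x) = sum_j pi_j f_j(x)
    posteriors = [w / fx for w in weighted]     # p(j | x)
    return [sum(c * p for c, p in zip(row, posteriors)) for row in costs]

costs = [[0.0, 10.0],   # c(1|1), c(1|2)
         [5.0, 0.0]]    # c(2|1), c(2|2)
priors = [0.8, 0.2]
f_x0 = [0.3, 0.4]       # f_1(x0), f_2(x0)

risks = bayes_risks(costs, priors, f_x0)
# Choose the action with the smallest Bayes risk (reported one-based).
choice = min(range(len(risks)), key=risks.__getitem__) + 1
print(risks, choice)
```

The smallest risk belongs to action 1, so $x_0$ is assigned to $\Pi_1$ even though $f_2(x_0) > f_1(x_0)$: the prior and the costs together outweigh the likelihood.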

Potrebbero piacerti anche

- Lesson 6 Prob DistributionsDocumento17 pagineLesson 6 Prob DistributionsAmirul SyafiqNessuna valutazione finora

- Random Variables IncompleteDocumento10 pagineRandom Variables IncompleteHamza NiazNessuna valutazione finora

- Financial Engineering & Risk Management: Review of Basic ProbabilityDocumento46 pagineFinancial Engineering & Risk Management: Review of Basic Probabilityshanky1124Nessuna valutazione finora

- Random Signals: 1 Kolmogorov's Axiomatic Definition of ProbabilityDocumento14 pagineRandom Signals: 1 Kolmogorov's Axiomatic Definition of ProbabilitySeham RaheelNessuna valutazione finora

- Risk FisherDocumento39 pagineRisk Fisherbetho1992Nessuna valutazione finora

- 3 Discrete Random VariablesDocumento8 pagine3 Discrete Random VariablesHanzlah NaseerNessuna valutazione finora

- Assign 1Documento5 pagineAssign 1darkmanhiNessuna valutazione finora

- Order StatisticsDocumento19 pagineOrder Statisticsmariammaged981Nessuna valutazione finora

- Class6 Prep ADocumento7 pagineClass6 Prep AMariaTintashNessuna valutazione finora

- Multivariate Normal Distribution: 3.1 Basic PropertiesDocumento13 pagineMultivariate Normal Distribution: 3.1 Basic PropertiesGeorge WangNessuna valutazione finora

- Discrete Probability Distribution Chapter3Documento47 pagineDiscrete Probability Distribution Chapter3TARA NATH POUDELNessuna valutazione finora

- Stochastic ProcessesDocumento46 pagineStochastic ProcessesforasepNessuna valutazione finora

- CH 3Documento22 pagineCH 3Agam Reddy MNessuna valutazione finora

- Statistics HandoutDocumento15 pagineStatistics HandoutAnish GargNessuna valutazione finora

- Introduction Statistics Imperial College LondonDocumento474 pagineIntroduction Statistics Imperial College Londoncmtinv50% (2)

- Prob Distribution 1Documento86 pagineProb Distribution 1karan79Nessuna valutazione finora

- 3 ES Discrete Random VariablesDocumento8 pagine3 ES Discrete Random VariablesM WaleedNessuna valutazione finora

- Discrete Probability DistributionDocumento5 pagineDiscrete Probability DistributionAlaa FaroukNessuna valutazione finora

- BasicsDocumento61 pagineBasicsmaxNessuna valutazione finora

- Review of Random VariablesDocumento8 pagineReview of Random Variableselifeet123Nessuna valutazione finora

- ANG2ed 3 RDocumento135 pagineANG2ed 3 Rbenieo96Nessuna valutazione finora

- Introduction To Probability DistributionsDocumento113 pagineIntroduction To Probability DistributionsMadhu MaddyNessuna valutazione finora

- Stats Cheat SheetsDocumento15 pagineStats Cheat SheetsClaudia YangNessuna valutazione finora

- Random Variable & Probability Distribution: Third WeekDocumento51 pagineRandom Variable & Probability Distribution: Third WeekBrigitta AngelinaNessuna valutazione finora

- Chapter 3 Mathematical Expectation Distribution Theory 2Documento1 paginaChapter 3 Mathematical Expectation Distribution Theory 2juanNessuna valutazione finora

- Week 1.: "All Models Are Wrong, But Some Are Useful" - George BoxDocumento7 pagineWeek 1.: "All Models Are Wrong, But Some Are Useful" - George BoxjozsefNessuna valutazione finora

- 1 Review and Overview: CS229T/STATS231: Statistical Learning TheoryDocumento4 pagine1 Review and Overview: CS229T/STATS231: Statistical Learning TheoryyojamaNessuna valutazione finora

- Notes and Solutions For: Pattern Recognition by Sergios Theodoridis and Konstantinos Koutroumbas.Documento209 pagineNotes and Solutions For: Pattern Recognition by Sergios Theodoridis and Konstantinos Koutroumbas.Mehran Salehi100% (1)

- Review Some Basic Statistical Concepts: TopicDocumento55 pagineReview Some Basic Statistical Concepts: TopicMaitrayaNessuna valutazione finora

- Point Estimation: Definition of EstimatorsDocumento8 paginePoint Estimation: Definition of EstimatorsUshnish MisraNessuna valutazione finora

- Multivariate Normal Distribution: 3.1 Basic PropertiesDocumento13 pagineMultivariate Normal Distribution: 3.1 Basic PropertiesApam BenjaminNessuna valutazione finora

- Unit 1 - 03Documento10 pagineUnit 1 - 03Raja RamachandranNessuna valutazione finora

- 3 Discrete Random Variables and Probability DistributionsDocumento22 pagine3 Discrete Random Variables and Probability DistributionsTayyab ZafarNessuna valutazione finora

- Module 2 (Updated)Documento71 pagineModule 2 (Updated)Kassy MondidoNessuna valutazione finora

- PROBABILITYDocumento127 paginePROBABILITYAngel Borbon GabaldonNessuna valutazione finora

- Multi Varia Da 1Documento59 pagineMulti Varia Da 1pereiraomarNessuna valutazione finora

- Chapter 4Documento36 pagineChapter 4Sumedh KakdeNessuna valutazione finora

- A Very Gentle Note On The Construction of DP ZhangDocumento15 pagineA Very Gentle Note On The Construction of DP ZhangronalduckNessuna valutazione finora

- BS UNIT 2 Note # 3Documento7 pagineBS UNIT 2 Note # 3Sherona ReidNessuna valutazione finora

- Likelihood, Bayesian, and Decision TheoryDocumento50 pagineLikelihood, Bayesian, and Decision TheorymuralidharanNessuna valutazione finora

- RV Prob DakamistributionsDocumento43 pagineRV Prob DakamistributionsMuhammad IkmalNessuna valutazione finora

- Fisher Information and Cram Er-Rao Bound: Lecturer: Songfeng ZhengDocumento12 pagineFisher Information and Cram Er-Rao Bound: Lecturer: Songfeng Zhengprobability2Nessuna valutazione finora

- Random Variables PDFDocumento64 pagineRandom Variables PDFdeelipNessuna valutazione finora

- Chapter 4Documento21 pagineChapter 4Anonymous szIAUJ2rQ080% (5)

- Crib Sheet For Exam #1 Statistics 211 1 Chapter 1: Descriptive StatisticsDocumento5 pagineCrib Sheet For Exam #1 Statistics 211 1 Chapter 1: Descriptive StatisticsVolodymyr ZavidovychNessuna valutazione finora

- Probability DistributionsDocumento85 pagineProbability DistributionsEduardo JfoxmixNessuna valutazione finora

- Data Mining: By: Muhammad HaleemDocumento36 pagineData Mining: By: Muhammad HaleemSultan Masood NawabzadaNessuna valutazione finora

- Distribuciones de ProbabilidadesDocumento10 pagineDistribuciones de ProbabilidadesPatricio Antonio VegaNessuna valutazione finora

- EXAM1 Practise MTH2222Documento4 pagineEXAM1 Practise MTH2222rachelstirlingNessuna valutazione finora

- Multivariate Distributions: Why Random Vectors?Documento14 pagineMultivariate Distributions: Why Random Vectors?Michael GarciaNessuna valutazione finora

- Probability Theory (MATHIAS LOWE)Documento69 pagineProbability Theory (MATHIAS LOWE)Vladimir MorenoNessuna valutazione finora

- Chapter 3Documento39 pagineChapter 3api-3729261Nessuna valutazione finora

- ENGDAT1 Module3 PDFDocumento35 pagineENGDAT1 Module3 PDFLawrence BelloNessuna valutazione finora

- OptimalLinearFilters PDFDocumento107 pagineOptimalLinearFilters PDFAbdalmoedAlaiashyNessuna valutazione finora

- Random VariablesDocumento21 pagineRandom VariablesKishore GNessuna valutazione finora

- 3.1 Binary ClassificationDocumento4 pagine3.1 Binary ClassificationNCT DreamNessuna valutazione finora

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)Da EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)Nessuna valutazione finora

- Mathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsDa EverandMathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsNessuna valutazione finora

- Prospects in Mathematics. (AM-70), Volume 70Da EverandProspects in Mathematics. (AM-70), Volume 70Nessuna valutazione finora

- Distance-Based TechniquesDocumento7 pagineDistance-Based TechniquesGeorge WangNessuna valutazione finora

- Hypothesis Testing (Hotelling's T - Statistic) : 4.1 The Union-Intersection PrincipleDocumento19 pagineHypothesis Testing (Hotelling's T - Statistic) : 4.1 The Union-Intersection PrincipleGeorge WangNessuna valutazione finora

- Multivariate Normal Distribution: 3.1 Basic PropertiesDocumento13 pagineMultivariate Normal Distribution: 3.1 Basic PropertiesGeorge WangNessuna valutazione finora

- Principal Components Analysis (PCA) : 2.1 Outline of TechniqueDocumento21 paginePrincipal Components Analysis (PCA) : 2.1 Outline of TechniqueGeorge WangNessuna valutazione finora

- MVA Section1 2012Documento14 pagineMVA Section1 2012George WangNessuna valutazione finora

- Evermotion Archmodels PDFDocumento2 pagineEvermotion Archmodels PDFLadonnaNessuna valutazione finora

- Introduction To The Open Systems Interconnect Model (OSI)Documento10 pagineIntroduction To The Open Systems Interconnect Model (OSI)Ajay Kumar SinghNessuna valutazione finora

- Loganathan Profile - May 2016Documento2 pagineLoganathan Profile - May 2016dlogumorappurNessuna valutazione finora

- Lecture - 2: Instructor: Dr. Arabin Kumar DeyDocumento4 pagineLecture - 2: Instructor: Dr. Arabin Kumar Deyamanmatharu22Nessuna valutazione finora

- Jit, Jimma University: Computer Aided Engineering AssignmentDocumento8 pagineJit, Jimma University: Computer Aided Engineering AssignmentGooftilaaAniJiraachuunkooYesusiinNessuna valutazione finora

- Milling ProgramDocumento20 pagineMilling ProgramSudeep Kumar SinghNessuna valutazione finora

- GTD - Workflow PDFDocumento1 paginaGTD - Workflow PDFNaswa ArviedaNessuna valutazione finora

- 26 - B - SC - Computer Science Syllabus (2017-18)Documento32 pagine26 - B - SC - Computer Science Syllabus (2017-18)RAJESHNessuna valutazione finora

- Key Windows 8 RTM Mak KeysDocumento3 pagineKey Windows 8 RTM Mak KeysNavjot Singh NarulaNessuna valutazione finora

- Thesis 2 Sugar IntroductionDocumento4 pagineThesis 2 Sugar IntroductionKyroNessuna valutazione finora

- A Study On The Recent Technology Development in Banking IndustryDocumento6 pagineA Study On The Recent Technology Development in Banking IndustryPriyanka BaluNessuna valutazione finora

- BPR Reengineering Processes-CharacteristicsDocumento16 pagineBPR Reengineering Processes-Characteristicsamitrao1983Nessuna valutazione finora

- Past Papers .Documento4 paginePast Papers .Tariq MahmoodNessuna valutazione finora

- Exam Questions 2018Documento3 pagineExam Questions 2018naveen g cNessuna valutazione finora

- Infineon TLE9832 DS v01 01 enDocumento94 pagineInfineon TLE9832 DS v01 01 enBabei Ionut-MihaiNessuna valutazione finora

- 3300icp THB MCD4.0Documento395 pagine3300icp THB MCD4.0royalsinghNessuna valutazione finora

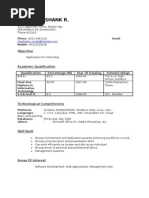

- Singh Shashank R.Documento3 pagineSingh Shashank R.shashankrsinghNessuna valutazione finora

- Currency Recognition Blind Walking StickDocumento3 pagineCurrency Recognition Blind Walking StickIJIRSTNessuna valutazione finora

- Introduction To MicroprocessorDocumento31 pagineIntroduction To MicroprocessorMuhammad DawoodNessuna valutazione finora

- IBM DS3200 System Storage PDFDocumento144 pagineIBM DS3200 System Storage PDFelbaronrojo2008Nessuna valutazione finora

- 9788131526361Documento1 pagina9788131526361Rashmi AnandNessuna valutazione finora

- Studocu Is Not Sponsored or Endorsed by Any College or UniversityDocumento51 pagineStudocu Is Not Sponsored or Endorsed by Any College or UniversityDK SHARMANessuna valutazione finora

- MCQ Book PDFDocumento104 pagineMCQ Book PDFGoldi SinghNessuna valutazione finora

- Artificial Intelligence A Z Learn How To Build An AI 2Documento33 pagineArtificial Intelligence A Z Learn How To Build An AI 2Suresh Naidu100% (1)

- LaoJee 66341253 - Senior AccountantDocumento5 pagineLaoJee 66341253 - Senior AccountantSaleem SiddiqiNessuna valutazione finora

- Production Resources ToolsDocumento2 pagineProduction Resources ToolsRAMAKRISHNA.GNessuna valutazione finora

- UVM Harness WhitepaperDocumento12 pagineUVM Harness WhitepaperSujith Paul VargheseNessuna valutazione finora

- FastRawViewer Manual PDFDocumento220 pagineFastRawViewer Manual PDFElena PăunNessuna valutazione finora

- Jenis-Jenis Profesi ITDocumento1 paginaJenis-Jenis Profesi ITAriSutaNessuna valutazione finora

- Manual WECON PLC EDITOR PDFDocumento375 pagineManual WECON PLC EDITOR PDFChristian JacoboNessuna valutazione finora