Bethany Crocker

December 6, 2013

EPS 513

Final Paper

I chose to use data from our report card assessment that we give our students. This

assessment is made by my mentor teacher. It is very similar to the STEP assessment that we

also give periodically. This assessment tests the students' letter and sound recognition skills,

oral rhyming, and reading skills (both decoding and comprehension). We also assess math and

writing skills. We give a similar assessment before every progress report and report card. This

ensures that we have accurate data on our students' progress.

On the reading portion of the assessment, we check that the students can point with

one-to-one correspondence, distinguish between words and letters, and that they follow words

from left to right. We also ask them basic comprehension questions, such as "What happens in this

story?", and ask them to identify the characters in the book and to name the main character.

All of the students were assessed with the same story, Goldilocks and the Three

Bears. The teacher who administered the assessment read the story aloud and asked the

students to point to words as the teacher read them. At the end of the story, the teacher asked

the student, "What happened in this story?" If the student was able to say something that

actually happened in the story, they were given a score of Well Developed. If the student

mentioned one thing that happened, and one thing that did not happen, they were given a score

of Partially Developed. If the student could not name anything that happened in the story or

said something that did not happen in the story, they were given a score of Beginning.

The students were also asked to name all of the characters in the story. If the students

named the three bears and Goldilocks, they received a score of Well Developed. If they named

only the bears or only Goldilocks, they received a score of Partially Developed, and if they did not

name any of the characters, they received a score of Beginning. For the main character question, the

students should have responded with Goldilocks. If they named Goldilocks, they received

Well Developed. If they named the bears, they received Partially Developed, and if they did not

name a character, they received a score of Beginning.

This assessment was given by three different teachers: my mentor, my co-resident and

myself. We were all supposed to ask the same questions and not give any prompting, so the

test should have reliable results. However, there is no way of knowing whether we asked the questions in

different ways, because we did not observe one another administering the assessment. The test

answers are valid because they were recorded in the same way and assessed a skill by asking

questions that are understandable and rely on students to recall information from a text that is

read aloud to them. This test assesses important skills that are part of the Common Core standards

for kindergarten students. If students are able to answer comprehension questions about a text,

it means they are on the right track to answering more complex questions as they progress

throughout the school year. This shows the consequential validity of this assessment. It may be

argued that this assessment could be even more reliable and valid. With only one

teacher assessing students, and with more questions asked in the comprehension

portion, the assessment might produce more accurate data. However, for the

purposes of data collection in our classroom, because of the consistency of these assessments

and their alignment to Common Core standards, I feel that this data accurately reflects student

understanding.

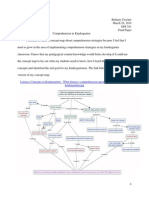

The results of the comprehension portion of the Report Card Assessment showed that

all of our students were able to recall some details from the story. Out of 21 students, 16

students scored Well Developed when identifying characters, three students scored Partially

Developed, and two students scored Beginning. On the main character part of the

test, 17 students scored Well Developed, one student scored Partially Developed, and three

students scored Beginning. This graph shows the results of this assessment specifically in the

comprehension portion of the report card assessment.
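
As a quick check on the counts reported above, the tallies can be summed and converted to percentages. This is only a minimal sketch: the variable names and the `percentages` helper are illustrative assumptions and are not part of the assessment itself.

```python
# Score counts reported in the paragraph above for the 21 students.
# Variable names are illustrative, not taken from the assessment.
identifying_characters = {"Well Developed": 16, "Partially Developed": 3, "Beginning": 2}
main_character = {"Well Developed": 17, "Partially Developed": 1, "Beginning": 3}


def percentages(counts):
    """Return each score level's share of the class as a whole-number percent."""
    total = sum(counts.values())
    return {level: round(100 * n / total) for level, n in counts.items()}


for name, counts in [("Identifying characters", identifying_characters),
                     ("Main character", main_character)]:
    # Print the question, the number of students tallied, and the percentages.
    print(name, sum(counts.values()), percentages(counts))
```

Both tallies sum to 21 students, and about 76% and 81% of the class scored Well Developed on the two questions.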

I chose to focus on two students for this reflection. These are students that I also

focused on when collecting observation data for the previous assignment. On this Report Card

Assessment, A.K. (a five-year-old girl) scored well developed on all the comprehension

questions. K.B. (a five-year-old boy) also scored well developed on the comprehension

questions for this assessment.

One thing I noticed through the observation collection assignment was that these

students did not show persistence when answering comprehension questions as a whole group

on the rug during most of the read aloud I observed. When observing A.K. during a read aloud

lesson in which the students were asked comprehension questions about the main idea, A.K.

did not raise her hand during the second portion of the lesson. Also, A.K. was not able to show

that she understood the main idea of the story throughout the entire lesson.

K.B. was eager to participate during most of the comprehension lesson, but did get

distracted toward the end. His answer to the main idea question during his think, pair, share

activity was not accurate. He did raise his hand more often at the beginning of the lesson. He

collaborated well with others during the think, pair, share portion of the assignment.

This particular observation showed that both K.B. and A.K. lacked persistence when

seated on a rug for a half-hour period. K.B. raised his hand more at the beginning than

toward the end, which suggests that he is eager to participate, but lacks persistence in certain

academic situations. If this lack of persistence continues for these students during

lessons on the rug, it could affect their academic performance, especially when it comes to

comprehending read aloud assignments. The teaching strategies used on the rug will need to

change so that students can engage in more discussion. Persistence

was higher for these students, and for all students in the classroom, when

they were allowed to engage in more discussion.

Of course, this lesson was not the only lesson in which I have observed K.B. and A.K.

participate. In other read aloud lessons, I have noticed that they actively participate during the

entire rug time, especially when they are given chances to use gestures, when teacher talk is limited, when

positive narration is used frequently to redirect attention to the lesson, and when rug time is cut

down to 10-15 minutes instead of thirty.

K.B. and A.K. scored well on the comprehension portion of their report card assessment,

but when tracking observation data during a whole group reading lesson in which

comprehension skills were practiced, the students showed a lack of persistence and a lack of

knowledge about the comprehension skill. I have also gathered a few more pieces of

assessment data in order to analyze the knowledge, skills and learning strategies of these

students in the comprehension domain of language arts.

K.B. was given a running record assessment about three weeks after this observation

data was collected. When asked to recall details from the story, he was able to accurately recall

several things that happened in the story. While this did not assess the same comprehension

skill as the one targeted when the observation data was collected (main idea), it does suggest that K.B. has

the ability to focus on a story and answer comprehension questions accurately.

Data was collected during language arts small groups for A.K. During the months of

October and November, A.K. showed persistence and accuracy during every lesson. Data was

recorded five times from October 17th to November 7th. She was able to meet the objective of

every lesson during the small group activity. Although guided reading groups had not started yet

and these lessons were not assessing comprehension skills, she did show that she was very

persistent when participating in the learning activity. She was focused during the entire

fifteen-minute phonics lesson. She also showed accurate understanding of the concepts tested.

I know that my students are all able to recall details about a text, based on the data from

the report card assessment. Most of my students (16/21 and 17/21) are able to recall even more

specific details about the text, such as the identity of the main character. This also requires knowledge

of the term "main character," which was taught for an entire week during the read aloud block of

literacy before these report card assessments were given. However, when I observed a read aloud lesson

in which students were required to identify the main idea of the text, almost all of the students

were unable to identify it accurately. The two students I have

focused on also followed this trend. During the assessment, they answered

comprehension questions accurately, but during rug time they were unable to answer the

comprehension question. In Driven By Data, Paul Bambrick-Santoyo describes eight

mistakes that matter when trying to implement data-driven instruction effectively. I think that in

this case, my data does show a curriculum-assessment disconnect. The students may

be tested on main idea on a future report card assessment, but the assessment given for the

first quarter did not cover main idea, even though main idea was a part of the literacy curriculum for

the first quarter.

We have a variety of assessments in our classroom. These include interim

assessments, exit tickets, and checklists. In order to get an accurate picture of my students'

comprehension skills, I will need to carefully check every piece of data that I have which

pertains to comprehension. I think that the mistakes of "ineffective follow-up" and "not making

time for data" are important to avoid, as mentioned in our Driven By Data text (p. xxvi). It is

important that I follow up on the skills students are not getting by accurately collecting a variety

of data, which is happening in our classroom, but then also following up with the appropriate

assessments and perhaps re-teaching the concept if necessary.

I know that my students can recall details and name main characters in text, but they

have difficulty identifying the main idea of non-fiction texts. Do my students need more time with

non-fiction texts? Are we spending too much time asking lower-level recall questions and not

asking students to generalize about texts enough? Do my students need less time on the rug or

more time to discuss on the rug (less teacher talk)? It seems that they are more successful with

meeting the objectives for a comprehension lesson when given more time to talk and actively

participate in the lesson. Small group activities seem especially engaging for my kindergarten

students.

Thinking about student motivation is an important part of assessment, as we learned

throughout Lorrie Shepard's article, "The Role of Assessment in a Learning Culture." As we think

about creating classrooms that foster learning so that students are excited and motivated to

learn, we must also accurately record data in order to inform our instruction. This is why

observation data about student behavior is also so impactful when creating lesson plans that

are appropriate and have the students' best interests in mind.

As I think about both formal and informal assessments for monitoring my students'

progress in the area of reading comprehension, I think about a variety of ways to collect data. I

will need to use checklists when students are on the rug answering questions and participating

in think, pair, share discussions because I can then use that data to inform my instruction. I

have not been accurately recording data when students participate in oral discussion, especially

during rug time.

I will continue to use exit tickets on comprehension skills that ask students to represent

their understanding of the skill in a variety of ways to gauge whether or not the students are

understanding. For my students, this is mostly represented by circling pictures, drawing pictures

and sometimes attempting to write a sentence about the topic. However, writing is not a very

valid assessment yet because unless I go around the room to ask every student what they

mean to write, I will not be able to read student answers and record accurate data. Sometimes,

because there are three teachers in our room, we are able to write what all the students mean

on their papers. This data would be reliable to use.

Because I have noticed that my students work well in a small group setting, I will

continue to practice comprehension skills as we read guided reading books. Each teacher takes

notes on the students' understanding of the comprehension skill during the particular lesson. I

will continue to ask higher-level thinking questions, not just recall questions. Also, this is an

important time to review concepts learned in our whole group lesson.

Last, we will continue to use interim assessments such as STEP, which assess students

on comprehension skills, but not necessarily the ones that we have focused on during that

quarter. It is important that students are introduced to a wide variety of comprehension skills.

We will go over all of the skills learned in the first and second quarter in the spring. It would be

beneficial, however, to make sure that the progress report and report card assessments accurately

reflect the skills practiced in that quarter. Adding more comprehension questions that target

all of the comprehension skills practiced would provide more data that could inform our

instruction in the future.

I will continue to take observation notes on students because they provide more data

about student motivation and persistence during lessons. This will help me change my lessons

to engage more students. I can also reflect on my questioning techniques and teacher/student

talk ratio when I reflect on lessons. I hope to practice different ways of data collection

throughout my residency. As I strive to become a teacher who implements effective, data-driven

instruction, my hope is that I will continue to grow in planning more effective assessments and

collecting accurate data.

References

Bambrick-Santoyo, P. Driven By Data: A Practical Guide to Improve Instruction. (2010).

Shepard, L. The Role of Assessment in a Learning Culture. Educational Researcher. Vol. 29. No. 7. (Oct. 2000).
