GRAVITY AND MAGNETICS

IN TODAY'S OIL & MINERAL

INDUSTRY

(Updated in 2011)

Course notes prepared by

Professor J. Derek Fairhead

All course notes are copyright protected. These notes are for the sole use of

individuals attending the training course and cannot be reproduced for third parties

in any form without the written approval of Prof. J. D. Fairhead

Prof J Derek Fairhead, GETECH Group plc

Email jdf@getech.com

Web: www.getech.com

Copyright

The hard copy materials making up the course notes and the digital pdf

product are copyright protected and cannot be copied or given in any way to

third parties without the written approval of Prof Fairhead

About the Author: J Derek Fairhead

Biography

He took a Joint Honours degree in Physics and Geology at Durham University and an MSc and PhD in Geophysics at Newcastle upon Tyne University on the seismicity of Africa and the crustal structure of the East African Rift System based on gravity and magnetic data. He joined the Department of Earth Sciences, University of Leeds in 1972 as a lecturer in Geophysics and was promoted to Senior Lecturer and in 1996 to Professor of Applied Geophysics. He has been the founder and Managing Director of GETECH Group plc since 1986. GETECH originally stood for Geophysical Exploration Technology Ltd, which was a spin-out company from the University of Leeds in 2000 before successfully floating on AIM in 2005. GETECH offices are now located at Elmete Hall, Roundhay, Leeds. GETECH has compiled the world's largest gravity and magnetic database and provides a range of services to the oil and mineral industries. These services were traditionally the provision of data, data processing, data integration and integrated interpretation studies. Since 2004 GETECH has developed a Petroleum Systems Evaluation Group (PSEG) headed by internationally recognised geoscientists. This range of non-seismic services thus provides a set of integrated exploration solutions enabling the quantitative evaluation of sedimentary basin structure and architecture and the evolution of its petroleum systems.

His main academic interests lie in Applied Geophysics: improving interpretation

theory, understanding the geological and geophysical controls on sedimentary basin

development within and along the continental margins; and crust/mantle processes

related to rifting and break-up of continents and the influence that plate tectonics has

on continental tectonics. These studies by their very nature require an integrated

approach. In 1999 the SEG honoured him at their annual meeting in Houston for his

services to the oil industry and academia by presenting him with the Special

Commendation Award.

Table of Contents

Title: GRAVITY AND MAGNETICS IN TODAY'S OIL & MINERAL INDUSTRY (Updated 2011)

INTRODUCTION

Section 1 - Introduction

Section 2 - General Properties of Gravity & Magnetic (Potential) Fields

Section 3 Gravity & Magnetic Units and Rock Magnetism

GRAVITY

Section 4 Gravity Anomalies

Section 5 GPS in Gravity Surveys (Land, Marine & Air)

Section 6 Land Gravity Data: Acquisition and Processing

Section 7 Marine Gravity Data: Acquisition & Processing

Section 8 Airborne Gravity Data: Acquisition & Processing

Section 9 Gravity Gradiometer Data

Section 10 Satellite Altimeter Gravity Data: Acquisition & Processing

Section 11 Global Gravity Data & Models

Section 12 Advances in Gravity Survey Resolution

MAGNETICS

Section 13 Magnetic Data: Geomagnetic Field and Time Variations

Section 14 Magnetometers and Satellite & Terrestrial Global Magnetic

Models

Section 15 Aeromagnetic Surveying

MAPPING

Section 16 Geodetic Datums and Map Projections

Section 17 Gridding and Mapping Point and Line Data

DATA ENHANCEMENT

Section 18 Understanding the Shape of Anomalies and classic enhancement

methods to isolate individual anomalies

Section 19 Data Enhancement

INTERPRETATION

Section 20 Interpretation Methodology/Philosophy General Approach

Section 21 Structural (Edge) Mapping: Detection of faults and Contacts

Section 22 Estimating Magnetic Depth: Overview, Problems & Practice

Section 23 Quantitative Interpretation: Manual Magnetic Methods

Section 24 Quantitative: Forward Modelling

Section 25 Quantitative: Semi-Automated Profile Methods

Section 26 Quantitative: Semi-Automated Grid Methods: Euler

Section 27 Quantitative: Semi-Automatic Grid Methods: Local Phase (or Tilt), Local Wavenumber, Spectral Analysis and Tilt-depth

APPENDIX

USGS Map Projections

J D Fairhead, Potential Field methods for Oil & Mineral Exploration

GETECH/ Univ. of Leeds:

SECTION 1: INTRODUCTION

1.1 Geophysical Methods and their

related Rock Physical Properties

Geophysical exploration methods exploit the fact that as

lithology varies, so do the physical properties of the rock

concerned. The physical properties exploited are as

follows:

Gravity Method : determines the sub-surface spatial

distribution of rock density, ρ, which causes small

changes in the earth's gravitational field strength.

Magnetics Method : determines the sub-surface spatial

distribution of rock magnetisation properties, J, (or

susceptibility and remanence) which cause small

changes in the earth's magnetic (Geomagnetic) field

strength and direction.

Electrical Method : determines the sub-surface spatial

distribution in rock conductivity (=1/resistivity) using

artificially stimulated electrical fields (or varying magnetic

fields ) and measuring their effects.

The above methods are POTENTIAL FIELD methods

and give non-unique solutions.

Seismic Method : determines the sub-surface spatial

distribution of seismic elastic properties (acoustic

impedance, ρV). Reflection seismics allow high

resolution images of subsurface structures down to a

certain depth. However this method is very expensive.

Thus the first three methods are rapid methods for

evaluating the existence of sub-surface structures and

help to identify areas for seismic exploration.

Since physical property boundaries do not always

coincide with geological boundaries (chrono-

stratigraphic or lithological), geophysical surveys always

have to be treated with an understanding of what they

can and cannot tell you. Nevertheless, great insights,

obtained no other way (apart from saturation drilling),

can result.

Since it is seldom oil industry practice to log for

susceptibility or to measure remanence, it is normally

necessary to estimate/guess magnetic properties or to

estimate them as part of the interpretation process. The

mineral industry will often log susceptibility.

1.2 Role of Grav/Mag Methods in Oil

Exploration

Gravity and magnetic methods are used for:

Pre-seismic stage: Grav/Mag surveys will help to

evaluate depth to basement, structural and basin

configuration mapping, and thus provide major input to

seismic survey design.

During seismic exploration stage: Gravity collected

along and between seismic reflection lines to allow

interpolation/extrapolation of structures between and

away from seismic lines. (Ground magnetic data rarely

collected)

During seismic processing stage: Since gravity

data can be processed rapidly, they can be used to

define structures, particularly faults which can help

seismic processing decisions. Gravity can be used with

seismic data to improve velocity models of the near

surface which will allow better imaging of deeper

structures.

Post-seismic stage: Checks via model studies on

whether seismic interpretation is correct and helps

model deeper parts of sedimentary basins not imaged

by the seismic data.

1.3 Role of Grav/Mag methods in

mineral exploration

Aeromagnetic surveys are used to assist in basic

mapping, and to identify target areas for follow up

studies. Since about 2000 airborne gravity surveys have

become more common as a means of identifying geological

structures and targets. Combined airborne gravity and

magnetics can be a powerful means of differentiating

rock types.

Follow up high resolution aeromagnetic survey or

ground magnetic profiling to identify targets better.

Grav/Mag modelling with drilling to identify size and

subsurface shape of ore body

1.4 Basic differences between gravity

and magnetic methods

Gravity and magnetic fields follow very similar physical

laws. Yet the geological information they yield is very

different. This is because their sources are commonly of

quite different geological and physical nature. Rock

density seldom goes outside the range 1.8 to 3.0 g/cc
(often 2.2 to 2.7 g/cc), i.e. a range of less than an order of
magnitude, whereas magnetisation shows a very large
range of up to several orders of magnitude, since it is
controlled by the magnetic mineral content (normally
magnetite) within a rock.

Gravity Method: Here the object is to determine the

spatial variation in the acceleration due to gravity (small

g) which depends on the mass (density and volume) of

the rocks underlying the survey area.

The force of attraction F between the gravity meter mass M and a body of mass m is given by

$$F = \frac{G\,m\,M}{r^2}$$

where G is the Gravitational constant and r is the distance between masses m and M. Since F = Mg,

$$g = \frac{G\,m}{r^2}$$

so g is proportional to mass m and hence proportional to density ρ.
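As a rough numerical illustration of g = Gm/r², the sketch below evaluates the attraction of a buried spherical body treated as a point mass. The body dimensions, depth and density contrast are hypothetical values chosen only for illustration, and the function names are not from the course notes.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2

def point_mass_g(mass_kg, distance_m):
    """g = G*m/r^2 in m/s^2 for a point mass m at distance r."""
    return G * mass_kg / distance_m**2

def to_mgal(g_si):
    """Convert m/s^2 to mGal (1 mGal = 1e-5 m/s^2)."""
    return g_si / 1e-5

# Hypothetical target: sphere of radius 500 m with a density contrast of
# 300 kg/m^3 (0.3 g/cc), centred 1000 m directly below the gravity meter.
# Outside the sphere its attraction equals that of a point mass at its centre.
excess_mass = (4.0 / 3.0) * math.pi * 500.0**3 * 300.0
g_anomaly_mgal = to_mgal(point_mass_g(excess_mass, 1000.0))
print(f"anomaly = {g_anomaly_mgal:.2f} mGal")
```

The result is of the order of 1 mGal, roughly one part in a million of the Earth's total field, which is why survey-grade instruments are needed.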

Density is a scalar quantity (has only magnitude, not

direction). This makes the shape of gravity anomalies

simpler and generally easier to interpret than magnetic

anomalies. Density boundaries tend to be associated

with: porosity changes, faults, unconformities, basin

edges or basin floor, limestones, dolomite or evaporite

occurrences, salt occurrences and major lithologic

boundaries

Magnetic Method. Here the object is to determine the

spatial variation of the geomagnetic field within the

survey area and use these magnetic field variations to

say something about the geometry, depth and magnetic

properties of subsurface rock structures. The

magnetisation of rocks has both direction and

magnitude (thus magnetisation is a vector quantity) and

can be a combination of both Remanent and Induced

magnetisation. The induced magnetisation depends on

the rock's susceptibility while the remanent

magnetisation (remanence) depends on the history of

the rock.

These factors tend to make the shape of the magnetic

anomalies complex and in general more difficult to

interpret than gravity anomalies.

1.5 Scaling Properties of Gravity and

Magnetic Data

Gravity Field: Due to its monopole source nature, the

amplitude of g is proportional to scale change. That is, if

a structure is double the size of another (or the density

contrast doubles) then the gravity effects will also

double. This can be viewed as a doubling of the mass.

Gravity maps are therefore often dominated by the

gravitational effects of large regional density structures

and the gravity effects due to shallow small scale

structures, that are of interest, may only represent a

small percentage of the gravity signal (often less than

10%).

Magnetic Field: due to the dipole source nature, the

magnetic field scales differently. The amplitude of a

magnetic anomaly is unaffected by physical scale

change. This in part is due to the magnetic effect not

arising from the bulk volume of the magnetic material

but from the surface area of the magnetic interface and

that magnetic fields decay more rapidly with distance.

This causes magnetic maps to appear to favour the

effects of shallow sources over deep ones. This can be

a problem when volcanics (generally strongly magnetic)

occur within the sedimentary section of a basin, since

their magnetic signal will tend to dominate and make it

difficult or impossible to determine depth of the basin

from the magnetic signal arising from the crystalline

basement interface. If no shallow volcanics are present

the effects of the crystalline basement can usually be

seen in magnetic maps.

Figure 1/1 illustrates scaling effect over a simple 2D

body.

Figure 1/1 Scaling relations. Solid anomaly lines

relate to effects of larger body and the dashed lines

the effect of the smaller body
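The scaling behaviour described above can be checked with a simple sketch that uses a buried sphere as a stand-in for the 2D body of Figure 1/1. This is an assumption made for simplicity: the sphere is treated as a point mass for gravity and as a vertical dipole for magnetics, and all numerical values are hypothetical.

```python
import math

G = 6.674e-11          # gravitational constant, SI
MU0_OVER_4PI = 1.0e-7  # mu_0 / (4*pi), SI

def sphere_gravity_peak(radius, depth, density_contrast):
    """Peak vertical gravity (m/s^2) over a buried sphere (monopole source)."""
    mass = (4.0 / 3.0) * math.pi * radius**3 * density_contrast
    return G * mass / depth**2

def sphere_magnetic_peak(radius, depth, magnetisation):
    """Peak field (T) above a vertically magnetised sphere (dipole source).
    On the dipole axis B = (mu0/4pi) * 2*m / r^3, with moment m = M * volume."""
    moment = magnetisation * (4.0 / 3.0) * math.pi * radius**3
    return MU0_OVER_4PI * 2.0 * moment / depth**3

# Double every linear dimension (radius and depth) of the same body.
small = (100.0, 300.0)   # radius, depth in metres (hypothetical)
large = (200.0, 600.0)

gravity_ratio = (sphere_gravity_peak(*large, 300.0)
                 / sphere_gravity_peak(*small, 300.0))
magnetic_ratio = (sphere_magnetic_peak(*large, 1.0)
                  / sphere_magnetic_peak(*small, 1.0))
print(gravity_ratio, magnetic_ratio)
```

Doubling the scale doubles the gravity amplitude (mass grows as the cube of size, distance squared as the square) but leaves the magnetic amplitude unchanged (moment and distance cubed both grow as the cube).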

1.6 Lateral Density and Magnetisation

contrasts

Lateral rather than vertical density contrasts cause

gravity anomalies. The magnetostatic charge of the

body will control the magnetic anomaly (see fig 1/2).

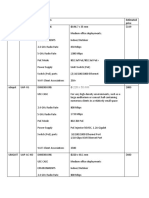

Table: Gravity vs Magnetic Effects

| Property | Gravity | Magnetic field |
| --- | --- | --- |
| Anomaly detection | Vertical component of local anomalous gravity | Component along local field direction of local anomalous flux density |
| Means of measurement | Spring balance | Atomic/nuclear effects |
| Source type | Monopole | Dipole |
| Scaling | Increases with scale | Independent of scale |
| Body physical property | Mass | Magnetic dipole moment |
| Rock physical property | Density, i.e. mass per unit volume | Magnetisation (induced or remanent), i.e. dipole moment per unit volume |
| Range of physical property | Rocks are 1.8 to 3.0 g/cc, often 2.0 to 2.8 g/cc (less than an order of magnitude) | 0 to 40 A/m (susceptibility = 0 to 1 SI) (up to 5 orders of magnitude) |
| Lateral changes | Gradual with well defined boundaries (faults, contacts) | Chaotic in basement rocks but can be similar to gravity |
| Low values | Water (1.0 g/cc, or less for ice, or up to 1.03 g/cc for sea water), halite, unconsolidated or porous sediments | Sediments |
| Mid values | Shales | Acid igneous and metamorphic rocks |
| High values | Consolidated sediments, carbonates, anhydrite, igneous rocks | Basic igneous and metamorphic rocks, iron ore, banded iron formation |
| Geological information provided | Faults, basin location, sediment thickness, porosity | Extrusive and igneous rocks. Depth and structure of metamorphic basement. Structure of sediments (HRA) |

Figure 1/2 Lateral density and susceptibility

contrasts cause anomalies.

For magnetic anomalies, the anomaly response is more

complex than just lateral changes in susceptibility since

magnetisation is a vector and we need to consider

magnetic flux and magnetostatic charge. The magnetic

response of a geological structure depends on the

orientation of the body with respect to the inducing field

and the top surface of a structure often contributes

significantly to the shape and size of a magnetic

anomaly. (see Section 18 for understanding the shape

of magnetic anomalies).

1.7 Geological Context

Rocks tend to be more uniform in their density than in

their magnetisation. Different rock types tend to have

different densities and magnetisations.

In general:

High density (3.0 g/cc) ........> Low density (1.8 g/cc)

Ultrabasic > Basic > Metamorphic > Acid intrusive > Sediments

Strong magnetisation ........> Weak magnetisation

The general trend in density and magnetisation properties

of rocks is similar, with high density rocks (e.g. Gabbro)

having strong magnetisation and low density rocks (e.g.

sediments) having weak magnetisation. Thus it is to be

expected that gravity and magnetic anomaly maps will

show some degree of correlation.

1.8 Reference Books

M. B. Dobrin. Introduction to Geophysical Prospecting. McGraw-Hill.

J. Milsom. Field Geophysics. Geol. Soc. of London.

D. S. Parasnis. Principles of Applied Geophysics. Chapman and Hall.

G. D. Garland. The Earth's Shape and Gravity. Pergamon Press.

C. Tsuboi. Gravity. George Allen and Unwin.

W. Torge. Gravimetry. Walter de Gruyter.

W. M. Telford et al. Applied Geophysics. Cambridge University Press.

Grant and West, 1965. Interpretation Theory in Applied Geophysics. McGraw-Hill.

Richard J. Blakely, 1995. Potential Theory in Gravity and Magnetic Applications. Cambridge Univ. Press.

AGSO Journal of Australian Geology & Geophysics, Vol. 17, No. 2, 1997. Thematic Issue: Airborne magnetic and radiometric surveys. Ed. P. Gunn.

SEG No. 8 / AAPG No. 43, 1998. Geologic Applications of Gravity and Magnetics: Case Histories. Eds R. I. Gibson and P. S. Millegan.

SECTION 2: GENERAL PROPERTIES OF GRAVITY

& MAGNETIC (POTENTIAL) FIELDS

2.1 General Properties

Potential field theory can be applied to a broad class of

force fields in which no dissipative losses of energy

occur when a body moves from one point to another.

Such fields are conservative and the potential energy of

the body depends only on its position and not on the

path along which the body moved.

For simplicity let us only consider the gravity field.

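Field of a monopole (Gravity): The force between two monopoles of strength (mass) $m_1$ and $m_2$ situated a distance r apart is

$$F = \frac{G\,m_1 m_2}{r^2}$$

Figure 2/1: Field of a monopole (Gravity)

Let $m_1$ go to 1 (a unit pole) and $m_2$ go to m. The unit pole moves a distance dr against the field of m.

External work $dU = -F\,dr$ (force x distance), where U = Potential Energy, so

$$\frac{dU}{dr} = -F = -\frac{Gm}{r^2}$$

Integrating,

$$U = \frac{Gm}{r} + \text{constant}$$

To make the constant zero we define U as the work done in bringing the unit pole from infinity to the point, i.e. when m is at infinity with respect to the unit mass, U = 0, so constant = 0.

So Potential Energy $U = Gm/r$

In vector notation $\mathbf{F} = \operatorname{grad} U = \nabla U$, where grad is short for gradient. Here $\mathbf{F}$ is the vector force on the unit mass, which points towards m (the direction of decreasing r), whereas F in the scalar expressions above is its magnitude.

$$\mathbf{F} = \mathbf{F}_x + \mathbf{F}_y + \mathbf{F}_z = \mathbf{i}\,\frac{\partial U}{\partial x} + \mathbf{j}\,\frac{\partial U}{\partial y} + \mathbf{k}\,\frac{\partial U}{\partial z}$$

where i, j & k are unit vectors in the x, y & z directions.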

Question 1: Does the gravitational force field of a

mass interact with the gravitational force field of

another mass?

Figure 2/2: Hall Effect

If you apply a current and a magnetic field in perpendicular directions through a material, it is possible to generate a force field in the third perpendicular direction. Thus the current and magnetic force fields have interacted, giving rise to a voltage potential in the mutually perpendicular direction. So do two gravitational force fields interact to give rise to a new vector field?

To answer this one needs to solve for Curl F:

$$\operatorname{curl}\mathbf{F} = \mathbf{i}\left(\frac{\partial F_z}{\partial y} - \frac{\partial F_y}{\partial z}\right) + \mathbf{j}\left(\frac{\partial F_x}{\partial z} - \frac{\partial F_z}{\partial x}\right) + \mathbf{k}\left(\frac{\partial F_y}{\partial x} - \frac{\partial F_x}{\partial y}\right)$$

For potential fields such as gravity and magnetics, Curl F = 0.

Answer: There is no interaction between gravity force fields. Therefore the gravity force is a continuous function of the space co-ordinates.

Question 2: How does the gravity force field diverge

or change with distance?

Need to evaluate the Divergence (Div)

Figure 2/3: Divergence of force field

Consider force in terms of lines of force (flux).

We know F is proportional to $1/r^2$.

At distance r the surface enclosing mass m has an area proportional to $r^2$.

force = No. of lines/unit area

So it is possible to calculate the Divergence of the force field ($\partial F/\partial r$) in terms of Surface or Volume Integrals.

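i) Consider a surface S that encloses no attracting matter

Figure 2/4: Surface enclosing no attracting matter

$$\int_v \operatorname{div}\mathbf{F}\,dv = \int_s F_n\,ds = 0$$

where $F_n$ = force normal to surface s, or

$$\int_v \frac{\partial F}{\partial r}\,dv = \int_s F_n\,ds = 0$$

Since F = dU/dr = grad U, therefore div grad U = 0, or

$$\nabla^2 U = \frac{\partial^2 U}{\partial x^2} + \frac{\partial^2 U}{\partial y^2} + \frac{\partial^2 U}{\partial z^2} = 0 \qquad \text{Laplace's Equation}$$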

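ii) Consider a surface S that encloses some attracting matter

Figure 2/5: Surface enclosing some attracting matter

$$\int_s F_n\,ds = \int_v \operatorname{div}\mathbf{F}\,dv = 4\pi G M$$

where the integral of div F is the total flux crossing the surface S, which does not depend on the position of the masses inside it, and M = Total Mass = $m_1 + m_2 + m_3$. For example, for a mass M at the centre of a sphere of radius r the flux is

$$\left(\frac{GM}{r^2}\right) 4\pi r^2 = 4\pi G M$$

Thus one can show that

$$\nabla^2 U = \operatorname{div}\mathbf{F} = -4\pi G \rho \qquad \text{Poisson's Equation}$$

The minus sign appears because F = grad U points towards the attracting mass, so the flux counted along the outward normal is negative; the value $4\pi G M$ above is its magnitude.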

2.2 Implications of Laplace's and Poisson's equations

i. Interpretations of gravity (or magnetic) fields are non-unique

Figure 2/6: Interpretation non uniqueness

Both masses give rise to the same gravity force field at the surface. Thus one needs to use all the geological information available to control the interpretation.

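ii. Use Laplace's Equation to obtain 2nd vertical derivatives

$$\nabla^2 U = \frac{\partial^2 U}{\partial x^2} + \frac{\partial^2 U}{\partial y^2} + \frac{\partial^2 U}{\partial z^2} = 0$$

so that

$$\frac{\partial^2 U}{\partial z^2} = -\left(\frac{\partial^2 U}{\partial x^2} + \frac{\partial^2 U}{\partial y^2}\right)$$

For a gravity or magnetic anomaly A

$$\frac{\partial^2 A}{\partial z^2} = -\left(\frac{\partial^2 A}{\partial x^2} + \frac{\partial^2 A}{\partial y^2}\right)$$

where A is the anomaly field and the horizontal derivatives can be calculated from the map data.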
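The use of Laplace's equation to obtain the second vertical derivative from horizontal derivatives can be illustrated with a minimal sketch. It assumes a synthetic anomaly (the vertical attraction of a buried point source with G*m set to 1) and simple central finite differences; the function names and all numerical values are hypothetical.

```python
def anomaly(x, y, z, depth=1000.0):
    """Vertical attraction of a unit point source (G*m = 1) buried at `depth`
    below the origin; z is the observation height, positive upwards."""
    dz = depth + z
    r2 = x * x + y * y + dz * dz
    return dz / r2**1.5

def second_diff(f, step):
    """Central second difference of a one-variable function about 0."""
    return (f(step) - 2.0 * f(0.0) + f(-step)) / step**2

x0, y0, step = 200.0, 100.0, 10.0   # observation point and grid step (m)

# Horizontal second derivatives, as would be computed from map (grid) data.
d2x = second_diff(lambda s: anomaly(x0 + s, y0, 0.0), step)
d2y = second_diff(lambda s: anomaly(x0, y0 + s, 0.0), step)

# Second vertical derivative predicted by Laplace's equation.
d2z_laplace = -(d2x + d2y)

# Direct vertical difference, only possible here because the source is known.
d2z_direct = second_diff(lambda s: anomaly(x0, y0, s), step)

print(d2z_laplace, d2z_direct)
```

The two estimates agree closely, which is the point of the method: in practice only the horizontal derivatives are available from the map, and the vertical one is inferred.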

iii. Upward or downward continuation of the field

The flux crossing a horizontal surface at different

heights will be the same. Since the flux is diverging

then the flux density is changing with height, which

causes an increase in wavelength and decrease in

amplitude of anomaly with height. This provides a

means of predicting the change in anomalies

(wavelength and amplitude) with distance from their

source e.g. upward continuation acts like a low pass

filter and downward continuation acts like an amplifier,

which also amplifies noise.
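The filtering behaviour of continuation can be sketched for a single wavelength component. The sketch relies on the standard result, not derived in these notes, that a component of wavelength λ changes in amplitude by exp(-2πΔh/λ) when the observation level is raised by Δh above all sources; the function name and the chosen wavelengths are hypothetical.

```python
import math

def continuation_factor(wavelength_m, height_change_m):
    """Amplitude factor exp(-2*pi*dh/wavelength) for one wavelength component.
    height_change_m > 0 is upward continuation, < 0 is downward continuation."""
    return math.exp(-2.0 * math.pi * height_change_m / wavelength_m)

# Upward continuation by 500 m: short wavelengths are attenuated most,
# so the operation behaves like a low pass filter.
for wavelength in (500.0, 2000.0, 10000.0):
    print(wavelength, continuation_factor(wavelength, 500.0))

# Downward continuation by 500 m amplifies, and short wavelengths
# (where noise usually lives) are amplified most.
for wavelength in (500.0, 2000.0, 10000.0):
    print(wavelength, continuation_factor(wavelength, -500.0))
```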

Figure 2/7: Upward continuation of the field.

Implications: Spectral content of anomaly

systematically changes with wavelength increasing

and amplitude and gradients decreasing with height.

This systematic change in spectrum is clearly seen

when anomalies resulting from sources at different

depths are visualised in terms of their Power Spectrum.

The example in Fig 2/8 is for dipoles at 500 m and 1000 m depth.

Figure 2/8: Power spectrum of dipoles at 500 m and 1000 m depth.

The wavelength components making up an anomaly are

related to each other such that they form a linear

spectrum whose slope is a function of depth (the steeper

the slope, the deeper the top of the body). These spectral

properties are utilised later in interpretation.
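How a spectral slope maps to depth can be sketched under an idealised assumption: that the power at angular wavenumber k (radians per metre) decays as exp(-2kd) for a source at depth d, so that the slope of ln(power) against k is -2d. Real spectra contain additional source-dependent factors, so this is only a sketch of the principle; the function names and the synthetic spectra are hypothetical.

```python
import math

def fit_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

def depth_from_power_spectrum(wavenumbers, power):
    """Depth estimate d = -slope/2, assuming power ~ exp(-2*k*d)."""
    log_power = [math.log(p) for p in power]
    return -fit_slope(wavenumbers, log_power) / 2.0

# Synthetic spectra for sources at 500 m and 1000 m depth.
wavenumbers = [2.0 * math.pi * 1.0e-4 * i for i in range(1, 21)]
estimates = {}
for true_depth in (500.0, 1000.0):
    power = [math.exp(-2.0 * k * true_depth) for k in wavenumbers]
    estimates[true_depth] = depth_from_power_spectrum(wavenumbers, power)
    print(true_depth, estimates[true_depth])
```

The deeper source gives the steeper slope, in line with the statement above.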

2.3 Variation of Density and Seismic

Velocity with Depth

(see Sheriff and Geldart. Exploration Seismology

data Processing and interpretation Vol 2)

Density of a rock depends directly upon the density of

the minerals making up the rock and the porosity.

Density variations play a significant role in velocity

variations with high density normally corresponding to

high velocity (see Gardner et al., 1974). Gardner introduced the relationship

$$\rho = aV^{1/4}$$

where ρ is in g/cc, V in m/s and a = 0.31
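Gardner's relationship is easy to evaluate directly. The short sketch below uses a = 0.31 with V in m/s, as quoted above; the function name and the example velocities are hypothetical.

```python
def gardner_density(velocity_m_s, a=0.31):
    """Bulk density in g/cc from P-wave velocity in m/s: rho = a * V**0.25."""
    return a * velocity_m_s ** 0.25

# Example velocities (hypothetical) spanning slow to fast sediments.
for v in (2000.0, 3000.0, 4500.0, 6000.0):
    print(v, round(gardner_density(v), 2))
```

Because of the fourth-root dependence, a large change in velocity corresponds to only a modest change in density.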

The density of the minerals making up sedimentary rocks has a range of 2.7 g/cc +/- 4%. This density is close to the density of quartz, 2.68 g/cc. A sedimentary rock such as sandstone is mainly made up of quartz but has a bulk density of 2.4 g/cc +/- 10%. The difference is mainly due to the effects of porosity. To understand this, note that sedimentary rocks are either clastic or chemically deposited. Clastic rocks are composed of fragments of minerals, and thus have appreciable void space. Chemically deposited rocks can be formed by recrystallisation and/or the effects of percolating solutions. The void spaces are usually filled with fluids and the bulk density, ρ, is given exactly by

$$\rho = \phi\,\rho_f + (1-\phi)\,\rho_m$$

where φ = porosity, $\rho_f$ = fluid density and $\rho_m$ = matrix density.
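The bulk density relation, and its inverse for porosity, can be written as a small sketch. The matrix value of 2.68 g/cc follows the quartz figure quoted above; the 20% porosity and the sea water pore fluid of 1.03 g/cc are hypothetical example inputs, as are the function names.

```python
def bulk_density(porosity, fluid_density, matrix_density):
    """rho = phi * rho_f + (1 - phi) * rho_m (all densities in g/cc)."""
    return porosity * fluid_density + (1.0 - porosity) * matrix_density

def porosity_from_bulk(bulk, fluid_density, matrix_density):
    """Invert the same relation for porosity phi."""
    return (matrix_density - bulk) / (matrix_density - fluid_density)

rho = bulk_density(0.20, 1.03, 2.68)
phi = porosity_from_bulk(rho, 1.03, 2.68)
print(round(rho, 3), round(phi, 3))
```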

Seismic velocity is affected directly by the bulk density

and porosity since voids are volumes of low velocity

fluids. Thus density and velocity increase with depth as
rocks compact, which reduces void space and drives out
the void fluids. The relation of density and velocity is
shown in Figure 2/9 and in Figure 2/10 as density
versus transit time (normally given from well logs).

Figure 2/9: P wave velocity versus density relation for different lithologies. The dashed line shows constant acoustic impedance (kg/s m$^2$ x $10^6$). The dotted line is $\rho = aV^{1/4}$.

Figure 2/10: Formation density versus sonic transit

time. The effect on porosity is indicated by the

decreasing percentage value with increasing

compaction.

SECTION 3: GRAVITY & MAGNETIC UNITS &

ROCK MAGNETISM

3.1 SI, cgs and Practical Units

The units used in the Petroleum industry have

traditionally been, and remain, a horrible mixture of :

Foot, pound, second (FPS)

Centimetre, gramme, second (CGS)

In these notes Practical units will be used

In an attempt to bring order to physical units the

Système International d'Unités (SI) has been

introduced. The system uses the metre, kilogram,

second and ampere as primary units. Where possible

the relation between Practical and SI units is given.

3.2 Gravity Units

i. Acceleration g

Working formula:

$$F = \frac{G\,m_1 m_2}{r^2}$$

where $F = g\,m_1$, g = acceleration due to gravity

$m_1$ = mass in measuring system (gravity meter)

$m_2$ = mass of Earth and is a function of rock density

r = radius of Earth; this is not a constant since there are topography and latitude effects

G = gravitational constant

Small g is the pull of, or acceleration due to, gravity and is measured as follows:

1 cm/s$^2$ = 1 Gal = 0.01 m/s$^2$

1 mGal = $10^{-3}$ Gal = $10^{-5}$ m/s$^2$

SI unit is 1 GU = $10^{-1}$ mGal = $10^{-6}$ m/s$^2$

where Gal is named after Galileo (1564 - 1642) and GU is gravity unit.

The mGal is the Practical unit in common use, whereas the GU is the SI Unit (Système International d'Unités).

(SI for g is m/s$^2$; $10^{-3}$ is milli, $10^{-6}$ micro and $10^{-9}$ nano)

Earth's Gravity Field:

$g_{mean}$ for Earth ≈ 981000 mGal, so 1 mGal ≈ $10^{-6}$ of g for Earth.

Gravity meters can read to $10^{-9}$ of g (≈ 0.001 scale divisions for a LaCoste & Romberg meter).

In oil exploration, gravity variations of the order of

0.2 mGal (and less) can be important locally over a

structure with variations of 10's of mGals being

more common over sedimentary basins.

ii Gravity Gradients

Because the practical unit of acceleration for exploration gravity surveys is the mGal, it makes sense to use gradient units that are easily related to our unit of convenience. For historical and practical reasons the Eötvös (E) is defined in terms of the Gravity Unit (GU):

1 E = 1 GU/km = $10^{-9}$ s$^{-2}$

or 1 E = 0.1 microGal/m

or 1 E = 1 nGal/cm = 1 nanoGal/cm
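The gravity and gradient unit relations of this section can be collected in a small conversion sketch. The constants follow the definitions given above (1 mGal = 1e-5 m/s², 1 GU = 1e-6 m/s², 1 E = 1e-9 s⁻²); the function names are hypothetical.

```python
MGAL_IN_SI = 1.0e-5    # 1 mGal in m/s^2
GU_IN_SI = 1.0e-6      # 1 gravity unit in m/s^2
EOTVOS_IN_SI = 1.0e-9  # 1 Eotvos in s^-2 (m/s^2 per metre)

def mgal_to_gu(value_mgal):
    """Convert mGal to gravity units (1 mGal = 10 GU)."""
    return value_mgal * MGAL_IN_SI / GU_IN_SI

def mgal_to_si(value_mgal):
    """Convert mGal to m/s^2."""
    return value_mgal * MGAL_IN_SI

def gradient_in_eotvos(delta_g_mgal, distance_m):
    """Gradient in Eotvos for a gravity change delta_g (mGal) over a distance (m)."""
    return (delta_g_mgal * MGAL_IN_SI / distance_m) / EOTVOS_IN_SI

print(mgal_to_gu(1.0))                  # 1 mGal expressed in GU
print(gradient_in_eotvos(0.1, 1000.0))  # 1 GU (0.1 mGal) per km
print(gradient_in_eotvos(1.0e-4, 1.0))  # 0.1 microGal per metre
```

Each of the last two calls expresses one of the equivalent definitions of the Eötvös listed above, so both should come out at about 1 E.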

iii. Density, ρ

Figure 3/1: Range of sediment densities with depth based on well log data

Density, ρ, is a scalar quantity (has only magnitude and no direction).

1 gram/cubic centimetre = 1 g/cc = 1000 kg/m$^3$

The SI unit is the kg/m$^3$.

Practical unit is g/cc or g/cm$^3$ or g.cm$^{-3}$

Density of sea water is a function of salinity and temperature but is generally taken as 1.03 g/cc (Practical unit) or 1030 kg/m$^3$ (SI unit).

Density can be measured in a number of different ways

e.g. Dry, Saturated, Grain and Bulk density.

Density of sediments increases with depth due to:

(i) compaction (ii) lithification (iii) metamorphism

Figure 3/2: Range in bulk densities for various rock

types

3.3 Magnetic Units

Magnetic units are more complex than gravity units and can

be defined in various ways.

There are four fundamental terms that can be used to

describe how magnetised a material (or a region) is.

These are

B the magnetic induction;

H the magnetic field;

J the magnetic polarisation (or simply, the

magnetisation);

M the magnetic dipole moment per unit volume

These quantities are related in different ways in the two

systems (cgs and SI units) by the following equations:

cgs: $B = H + 4\pi J$, $J = M$

SI: $B = \mu_0 H + J$, $J = \mu_0 M$

where $\mu_0 = 4\pi \times 10^{-7}$ H/m is the permeability of free space.

The above equations show that all four fundamental

terms have the same units in the cgs system. In the

cgs system, however, it has been customary to

designate B in terms of Gauss (G), H in Oersteds (Oe),

and J and M in electromagnetic units (emu) per cubic

centimetre. Incidentally, it has also been customary in

the cgs system to express the magnetisation of

materials in terms of the magnetic polarisation J and to

call this quantity the intensity of magnetisation, the

magnetisation per unit volume or simply the

magnetisation. Because J and M are the same in the

cgs system, this introduces no ambiguity. In SI units, B

and J are expressed in Tesla (T), while M and H are

expressed in amperes (A) per metre.

The conversion factors for the four terms are

(B) 1 T = $10^4$ G

(H) 1 A/m = $4\pi \times 10^{-3}$ Oe

(J) 1 T = $10^4/4\pi$ emu/cm$^3$

(M) 1 A/m = $10^{-3}$ emu/cm$^3$

Note that the conversions for B and M involve only powers of 10, while those for H and J involve factors of $4\pi$.

A fifth important term is used to describe how

magnetised an object may become under the influence

of a field. This is called the magnetic susceptibility (k),

for which the defining equations are

cgs: $M = J = kH$

SI: $M = J/\mu_0 = kH$

In both systems, susceptibility is a pure number. It follows from the conversions given above that 1 cgs unit of susceptibility equals $4\pi$ SI units of susceptibility.
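The conversion factors between SI and cgs magnetic units lend themselves to a small helper sketch. The factors are those listed above; the function names and the example inputs are hypothetical.

```python
import math

def tesla_to_gauss(b_tesla):
    """(B) 1 T = 1e4 G."""
    return b_tesla * 1.0e4

def a_per_m_to_oersted(h_a_per_m):
    """(H) 1 A/m = 4*pi*1e-3 Oe."""
    return h_a_per_m * 4.0 * math.pi * 1.0e-3

def a_per_m_to_emu_per_cc(m_a_per_m):
    """(M) 1 A/m = 1e-3 emu/cm^3."""
    return m_a_per_m * 1.0e-3

def susceptibility_cgs_to_si(k_cgs):
    """1 cgs unit of susceptibility = 4*pi SI units."""
    return 4.0 * math.pi * k_cgs

def susceptibility_si_to_cgs(k_si):
    """Inverse of the conversion above."""
    return k_si / (4.0 * math.pi)

# A field of 50000 nT (5e-5 T) in Gauss, and a susceptibility of 0.0057 SI in cgs.
print(tesla_to_gauss(5.0e-5))
print(susceptibility_si_to_cgs(0.0057))
```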

For these study notes the following units and

terminology are generally used in exploration.

i. Geomagnetic Field Strength, T

Measured in terms of geomagnetic flux density B; all exploration instruments measure this.

In Gauss, gamma, nanoTesla and Weber/m$^2$:

1 G = $10^5$ gamma = $10^5$ nT = $10^{-4}$ Wb/m$^2$

The SI unit is the nT.

You may be more familiar with the magnetic field given in units of the Oersted (Oe), which is the magnetic intensity H. Russian aeromagnetic maps were commonly contoured in intervals of 1 mOe = 100 nT, where 1 Oe = $10^3/4\pi$ A/m.

H is computed in the same way as B but differs in that B is dependent on the permeability $\mu_0$ of the material, where B ∝ $\mu_0$.

The relation between B & H is

$$B = \mu_0 H + kH$$

where $\mu_0$ is permeability and k is susceptibility.

For space (vacuum): $B = \mu_0 H$ since k = 0

In air: $B = H$ since $\mu_0 = 1$ (in cgs units) & k = 0

ii. Magnetisation J

(or magnetic moment/unit volume): This is a vector magnetic property of a rock. Magnetisation J is often quoted in electromagnetic units per unit volume (emu cm$^{-3}$), where 1 emu/cm$^3$ = $10^3$ A/m = $4\pi \times 10^{-4}$ Wb/m$^2$

(e.g. if k = 0.0057 SI then k = 0.00045 emu)

J = Ji + Jr

There are two components of Magnetisation:

Remanent Magnetisation (or Remanence) Jr

This is a natural property of some rocks, independent of

T, and is the magnetisation that remains if T is removed.

Jr direction is often the Earth's field direction at the time

of rock formation. Since rock deformation and/or continental

movements may have occurred since the time of

formation, the direction of magnetisation need not be the

same as the present direction of T.

Induced Magnetisation Ji (where Ji = kT)

Since Ji & T are in nT then k (the susceptibility) is

dimensionless. The magnetic susceptibility of rocks is

normally almost entirely dependent on the volume

percentage of magnetite (Fe$_3$O$_4$) in the rock (Figures 3/3a, 3/3b).

Figure 3/3a: Magnetic susceptibility of rocks as a function of magnetic mineral type and content. Solid lines: Magnetite; thick line = average trend, a = coarse-grained (>500 µm), well crystallised magnetite, b = finer grained (<20 µm), poorly crystallised, stressed, impure magnetite. Broken lines: Pyrrhotite; thick line = monoclinic pyrrhotite-bearing rocks, average trend; a = coarser grained (>500 µm), well crystallised pyrrhotite; b = finer grained (<20 µm) pyrrhotite (after Clark and Emerson 1991).

Figure 3/3b: Typical values of rock

susceptibility (where 1 cgs = 4π SI units)

Magnetite: a fair approximation is

k = 0.0025 x % magnetite, or % $\mathrm{Fe_3O_4}$ = 400 x k

Pyrrhotite is the second most common source of magnetic anomalies but its susceptibility is only about one-tenth that of magnetite

k = 0.00025 x % pyrrhotite, or % po = 4000 x k

Ilmenite, maghemite and titanomagnetite are less

important contributors to magnetic anomalies

Ferromagnesian minerals (e.g. amphiboles and

pyroxenes) do not contribute to susceptibility or

remanence

Since mafic rocks usually contain more magnetite than

felsic rocks, their magnetic susceptibilities are

correspondingly higher.
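The rules of thumb above are easy to apply numerically. The sketch below is illustrative only: the function names are hypothetical, and the notes do not state which unit system the 0.0025 and 0.00025 factors refer to, so the output of the first two functions should be read in whatever system the rule is quoted in. The cgs/SI conversion uses the 4π factor noted with Figure 3/3b.

```python
import math


def susceptibility_from_magnetite(pct_magnetite):
    """Rule of thumb from the notes: k = 0.0025 x % magnetite."""
    return 0.0025 * pct_magnetite


def susceptibility_from_pyrrhotite(pct_pyrrhotite):
    """Rule of thumb from the notes: k = 0.00025 x % pyrrhotite."""
    return 0.00025 * pct_pyrrhotite


def magnetite_percent_from_susceptibility(k):
    """Inverse rule of thumb: % Fe3O4 = 400 x k."""
    return 400.0 * k


def cgs_to_si(k_cgs):
    """Convert volume susceptibility from cgs (emu) to SI: k_SI = 4 pi k_cgs."""
    return 4.0 * math.pi * k_cgs


def si_to_cgs(k_si):
    """Convert volume susceptibility from SI to cgs (emu)."""
    return k_si / (4.0 * math.pi)


if __name__ == "__main__":
    k = susceptibility_from_magnetite(2.0)   # rock with 2 % magnetite
    print(k, magnetite_percent_from_susceptibility(k))
    print(si_to_cgs(0.0057))                 # close to the 0.00045 emu quoted in the notes
```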

Total Magnetisation J

J is the vector sum of Jr & Ji

J = Jr + Ji

The SI unit of J is the A/m. Susceptibility k is

dimensionless but normally quoted as susceptibility per

unit volume.

Koenigsberger Ratio, Qn :

Remanence is often measured by the ratio of remanent

to induced magnetisation

Qn = Jr/Ji

Qn is not strongly lithology-dependent, but is more a

factor of age and metamorphic history

In old rocks (Precambrian) Qn is generally less than 1 and

often < 0.2 (see section 3.4.6). Here Ji dominates.

In Tertiary and younger rocks Qn may be at least 3 and

possibly 10 or more, indicating that remanence

dominates.
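A short numerical sketch may help to show how Ji, Jr and Qn combine. It assumes, beyond what the notes state, that the induced magnetisation in A/m is obtained from the field in nT as Ji = k T / mu_0, and that directions are given as inclination (positive down) and declination in degrees in a north, east, down frame. All names are illustrative.

```python
import math

MU_0 = 4 * math.pi * 1e-7   # permeability of free space, T m/A


def unit_vector(inc_deg, dec_deg):
    """Unit vector (north, east, down) for a given inclination and declination."""
    inc, dec = math.radians(inc_deg), math.radians(dec_deg)
    return (math.cos(inc) * math.cos(dec),
            math.cos(inc) * math.sin(dec),
            math.sin(inc))


def induced_magnetisation(k_si, field_nt):
    """Induced magnetisation Ji in A/m for SI susceptibility k and field T in nT."""
    return k_si * field_nt * 1e-9 / MU_0


def total_magnetisation(k_si, field_nt, field_inc, field_dec, jr, jr_inc, jr_dec):
    """Return the vector sum J = Ji + Jr (A/m) and the Koenigsberger ratio Qn."""
    ji = induced_magnetisation(k_si, field_nt)
    u_t = unit_vector(field_inc, field_dec)   # induced part follows the present field
    u_r = unit_vector(jr_inc, jr_dec)         # remanence keeps its own direction
    j_vec = tuple(ji * a + jr * b for a, b in zip(u_t, u_r))
    return j_vec, jr / ji


if __name__ == "__main__":
    ji = induced_magnetisation(0.01, 50000.0)
    # Remanence equal in size but opposite in direction to the induced part.
    j_vec, qn = total_magnetisation(0.01, 50000.0, 60.0, 0.0, ji, -60.0, 180.0)
    print(ji, qn, math.sqrt(sum(c * c for c in j_vec)))
```

The example illustrates why remanence matters: with Qn = 1 and a reversed remanence direction, the total magnetisation almost cancels even though the rock has a substantial susceptibility.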

IMPORTANT: The range of susceptibility k is several

orders of magnitude greater than the range of density ρ.

3.4 Rock Magnetism

(Notes provided by Dr Nicola Pressling)

Theoretical Background: Atomic magnetic moments

are generated by the motion of electrons spinning about

their axes of rotation. Electron orbits are arranged in

shells and pairs of electrons with opposite spins cancel

each other out. Consequently, full electron shells will

have zero net magnetic moment and incomplete shells,

with unpaired electron spins, will have a net atomic

magnetic moment.

3.4.1 Induced and Remanent Magnetisation

The magnetic moment of an atom and any interaction

with surrounding atoms defines the three types of

magnetisation:

Diamagnetism (Not important in exploration)

Materials with no magnetic moment will still respond to

the presence of an external magnetic field. An

additional torque is exerted on the electrons causing

them to precess about the applied field direction and

induce a weak, negative magnetization that is

proportional to the applied field strength. Diamagnetism

vanishes when the applied field is removed and does

not depend on temperature.

Paramagnetism (Not important in exploration) In materials with a net magnetic moment, thermal energy favours random orientation of the unpaired spins. However, an external magnetic field will exert an aligning torque and induce a weak, positive magnetisation that is proportional to the strength of the applied field. Paramagnetism is inversely dependent on temperature and also vanishes in the absence of an applied field.

Ferromagnetism (Prime carrier type and thus

important in exploration) Atoms occupy fixed

positions in crystal lattices and electrons with unpaired

spins can be exchanged between neighbouring atoms.

The process requires large amounts of energy and

produces a strong spontaneous magnetisation orders of

magnitude greater than either dia- or paramagnetism in

the same external magnetising field. During thermal

expansion, inter-atomic distances increase and the

exchange coupling becomes weaker. The Curie

temperature, Tc, is defined as the temperature at which

the distance between atoms is such that they act

independently and paramagnetically, that is, when thermal

energy overcomes the magnetic exchange energy. Tc is different for

different minerals and can be diagnostic. Ferromagnetic

materials retain a net magnetisation even after the

removal of the applied magnetic field.

Figure 3/4: Various types of spin alignment possible

in ferromagnetic materials. (a) Ferromagnetism

sensu stricto. (b) Anti-ferromagnetism. (c)

Ferrimagnetism. (d) Canted anti-ferromagnetism. (e)

Defect ferromagnetism.

The term ferromagnetism strictly applies only when

spins are aligned perfectly in parallel. However,

alignment of the unpaired electron spins can occur in a

variety of ways within a crystal lattice and also between

lattice layers (Figure 3/4). Anti-ferromagnetism is when

spins are anti-parallel and there is zero net magnetic

moment. When spins are antiparallel but not equal in

magnitude, the resulting net magnetic moment is

referred to as ferrimagnetic. A weak magnetic moment

is also generated in canted anti-ferromagnetic

materials, where anti-ferromagnetic spins are obliquely

aligned. Additionally, lattice defects in ferromagnetic

materials can displace the magnetic structure and

cause a net magnetic defect moment as a result of any

subsequent unpaired spins.

Hysteresis Loops: Ferromagnets exhibit hysteresis properties: changes in the magnetisation of a ferromagnet lag behind any changes in the magnetising field. Figure 3/5 shows a typical ferromagnetic hysteresis loop. The magnetisation of the ferromagnet, M, increases as the applied strong magnetic field, H, increases. When all the individual magnetic moments are aligned with the applied field, the magnetisation is said to be saturated (Ms). A remanent magnetisation, Mrs, remains after the removal of the saturating applied field. Increasing the strong magnetic field in the opposite direction progressively remagnetises the material, cancelling out the original remanence. The coercive force, Hc, is the reverse field required to reduce the magnetisation to zero whilst still in the presence of the reverse applied field. The coercivity of remanence, Hcr, by comparison, is the reverse field required to reduce the magnetic remanence to zero after the removal of the reverse applied field.

Figure 3/5: Typical ferromagnetic hysteresis loop illustrating the changes in the magnetisation of a ferromagnet, M, lagging behind the changes in the magnetising field, H. Saturation magnetisation, Ms, remanent magnetisation, Mrs, coercivity, Hc, and coercivity of remanence, Hcr, are defined in the text.

3.4.2 Magnetic Domain Theory

Ferromagnetic properties depend heavily on grain size and shape, defining three magnetic domain regimes (Figure 3/6):

Single Domain (SD) SD grains are the ideal magnetic remanence carriers, acting as isolated magnetic dipoles. They are very stable, requiring a strong magnetic field to rotate the entire magnetic moment against the grain anisotropy. The theoretical range of SD grain sizes is very narrow: from 0.03 to 0.1 µm in equant grains and up to 1 µm in elongated grains, where magnetic anisotropy has a greater effect.

Figure 3/6: Magnetic domains. (a) SD grain aligned with the applied field; magnetostatic energy accumulating on the grain surface creates a self-demagnetising field. (b) Larger PSD grain. (c) Larger still MD grain. (d) Domain wall with sequentially rotating magnetic moments. (e) MD grain where the walls have moved to enlarge the domain aligned with the applied field; compare to the MD grain in (c).

Multi-Domain (MD) As grain size increases it becomes

energetically favourable to divide a grain into a number

of uniformly magnetised regions or domains, thereby

reducing the magnetostatic (self-demagnetising) energy

at the surface of the grain by 1/N, where N is the

number of domains. Each region is separated by thin

(0.1 µm) domain walls, within which the magnetic spins

sequentially rotate between the adjacent domain

directions. MD grains are less stable than SD grains as

it is energetically easier to move domain walls

compared to switching the magnetic moment of a whole

domain. The magnetisation of a MD grain is therefore

changed by enlarging domains with a specific magnetic

moment at the expense of any domains oppositely

magnetised. The number of domains in a grain depends

on factors such as grain size and shape, internal stress

and the number of defects present. True MD behaviour

should occur in grain sizes exceeding 15 µm.

Pseudo-Single Domain (PSD) These grains are of

intermediate size and thus contain only a limited

number of domains. PSD grains essentially act like SD

grains as the movement of domain walls is restricted by

strong interactions at the grain surface.
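The three domain regimes can be summarised as a rough grain-size classifier. This is only a sketch using the threshold sizes quoted above (SD from 0.03 to 0.1 µm in equant grains, up to 1 µm in elongated grains, MD above 15 µm). Treating grains smaller than the SD range as superparamagnetic (SP) and treating the thresholds as sharp boundaries are simplifying assumptions; the function name is hypothetical.

```python
def classify_domain_state(grain_size_um, elongated=False):
    """Rough magnetic domain regime for a grain of the given size in micrometres."""
    if grain_size_um <= 0:
        raise ValueError("grain size must be positive")

    sd_min = 0.03                           # lower limit of the SD range
    sd_max = 1.0 if elongated else 0.1      # elongated grains stay SD to larger sizes
    md_min = 15.0                           # true MD behaviour above this size

    if grain_size_um < sd_min:
        return "SP"     # assumption: too small to hold a stable remanence
    if grain_size_um <= sd_max:
        return "SD"
    if grain_size_um <= md_min:
        return "PSD"    # intermediate sizes with a limited number of domains
    return "MD"


if __name__ == "__main__":
    for size in (0.01, 0.05, 0.5, 5.0, 50.0):
        print(size, classify_domain_state(size), classify_domain_state(size, elongated=True))
```

In real rocks the boundaries also depend on grain shape, internal stress and defects, as noted for MD grains, so the output should be taken only as a first guide.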

3.4.3 Magnetic Mineralogy

The most important and most commonly occurring ferromagnetic minerals are iron-titanium (Fe-Ti) oxides. The various possible compositions of Fe-Ti oxides are displayed on the ternary diagram in Figure 3/7. Compositions varying from bottom to top have increasing Ti content and compositions varying from left to right have increasing ratios of ferric ($\mathrm{Fe^{3+}}$) to ferrous ($\mathrm{Fe^{2+}}$) iron.

Figure 3/7: Ternary diagram illustrating the composition of the iron-titanium oxides. Names and compositions of the important minerals and solid-solution series are labelled. Increasing oxidation is in the direction of the dashed arrows. Colour shading represents the variation in Tc with composition: increasing Ti content decreases Tc; increasing oxidation increases Tc. The Curie temperatures, Tc, of the end-member minerals of the two solid-solution series are noted explicitly.

Titanomagnetite and titanohaematite are primary crystallising phases in igneous rocks. In the titanomagnetite solid-solution series ($\mathrm{Fe_{3-x}Ti_xO_4}$), Ms and Tc both depend linearly on the composition, x, decreasing with the addition of $\mathrm{Ti^{4+}}$, which is relatively larger than the $\mathrm{Fe^{2+}}$ and $\mathrm{Fe^{3+}}$ cations and has no net magnetic moment. When x > 0.8, titanomagnetites behave paramagnetically at room temperature. In the titanohaematite solid-solution series ($\mathrm{Fe_{2-x}Ti_xO_3}$), Tc also decreases linearly with increasing $\mathrm{Ti^{4+}}$ content. However, the mode of ferromagnetism varies significantly with composition: when 0 < x < 0.45 the magnetism is the canted anti-ferromagnetism of haematite; when 0.45 < x < 0.8 the Ti and Fe are no longer equally distributed and the magnetism is ferrimagnetic; above x = 0.8 titanohaematite behaves paramagnetically.
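The composition ranges quoted for the two solid-solution series can be written as a small lookup. This is a sketch only: the function names are illustrative, and the behaviour exactly at the boundary values x = 0.45 and x = 0.8 is not specified in the notes, so the choice made in the code is an assumption.

```python
def titanohaematite_mode(x):
    """Magnetic behaviour of titanohaematite Fe(2-x)Ti(x)O3 for composition x."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("composition x must lie between 0 and 1")
    if x < 0.45:
        return "canted anti-ferromagnetic"   # as in haematite
    if x < 0.8:
        return "ferrimagnetic"               # Ti and Fe no longer equally distributed
    return "paramagnetic"                    # boundary handling is an assumption


def titanomagnetite_is_paramagnetic(x):
    """True when titanomagnetite Fe(3-x)Ti(x)O4 is paramagnetic at room temperature."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("composition x must lie between 0 and 1")
    return x > 0.8


if __name__ == "__main__":
    for x in (0.1, 0.6, 0.9):
        print(x, titanohaematite_mode(x), titanomagnetite_is_paramagnetic(x))
```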

High temperature oxidation Also known as

deuteric oxidation. This

process occurs during initial cooling at temperatures

above Tc and when there is enough oxygen present in

the melt. The composition moves to the right in the

ternary diagram. However, the resulting grains are often

not homogeneous, but instead composite grains, for

example, ilmenite lamellae in a titanomagnetite host.

Movement along the dashed arrows in Figure 3/7

reflects this oxidation process: primary titanomagnetite

of intermediate composition is replaced by Fe-rich

titanomagnetite, which results in increased Tc and Ms.

In addition, the grain size of the oxidation product is

reduced by the introduction of lamellae that break-up

the original grain matrix. Deuteric oxidation almost

always occurs unless the lava has been quenched or

cooled very rapidly. The extent of its effect depends on

the cooling rate and oxygen fugacity; end-member

products being rutile, pseudobrookite or haematite.

Low temperature oxidation Also known as

maghaematisation. This process occurs at lower

temperatures, generally < 200 °C, and is caused by

processes such as weathering or hydrothermal

circulation. Titanomagnetite alters into

titanomaghaematite, a cation-deficient product from the

diffusion of Fe out of the rock. Maghaemite is

metastable and inverts with time and temperature to the

more compact, but chemically equivalent, structure of

haematite. Inversion to haematite has the effect of

decreasing Ms and increasing Tc.

3.4.4 Lava as a Recording Medium

It is the small proportion of ferromagnetic minerals

present in some rocks that record ambient magnetic

fields. However, if the applied, ambient magnetic field is

removed, the net magnetisation will eventually decay to

zero by the relation

$M(t) = M_0 \exp(-t/\tau)$ (1.1)

where $M_0$ is the initial magnetisation, t is time and $\tau$ is the relaxation time, defined empirically as the time for the remanence to decay to 1/e of its initial value. $\tau$ decreases with increasing temperature and increases with coercivity and grain volume. The blocking temperature, TB, of a grain is the temperature below which $\tau$ is large and the magnetisation can be considered stable. At one extreme, $\tau$ can be as short as laboratory timescales, of the order of $10^{2}$ to $10^{3}$ seconds. Such superparamagnetic (SP) grains rapidly become randomly oriented in the absence of an applied field, effectively behaving like paramagnets. At the other extreme, $\tau$ can be of the order of $10^{9}$ years, so that the grains retain information on the geomagnetic field over geological timescales.
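The contrast between the two extremes is easy to see by evaluating equation (1.1) directly. The sketch below uses hypothetical function names and writes the relaxation time as tau; the example values are taken from the ranges quoted above and are not measurements.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600.0


def remaining_fraction(t, tau):
    """Fraction M(t)/M0 of the remanence left after time t (same units as tau)."""
    return math.exp(-t / tau)


def time_to_decay_to(fraction, tau):
    """Time needed for the remanence to fall to the given fraction of M0."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    return -tau * math.log(fraction)


if __name__ == "__main__":
    # Superparamagnetic grain: relaxation time of 100 s, observed after one hour.
    print(remaining_fraction(3600.0, 100.0))
    # Stable grain: relaxation time of 1e9 years, observed after one million years.
    tau_stable = 1e9 * SECONDS_PER_YEAR
    print(remaining_fraction(1e6 * SECONDS_PER_YEAR, tau_stable))
```

After one hour the superparamagnetic grain retains essentially nothing, while the stable grain has lost only about a tenth of a percent of its remanence after a million years.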

The natural remanent magnetisation (NRM) carried by a

rock may be composed of several different magnetic

components acquired at different times during its

history. The primary component is that acquired at the

time of initial formation. Secondary components are any

remanence acquired post-formation. The primary NRM

component in lavas is thermal remanent magnetisation

(TRM); the magnetisation acquired as the lava cools

and solidifies, held by grains with TB < Tc.

3.4.5 Sources of Secondary Magnetisation

Secondary remanent magnetisations overprint the

primary component, masking information about the

geomagnetic field recorded instantaneously at the time

of formation. However, since secondary components are

acquired at different times and usually in different

magnetic conditions, they can often be identified and

separated. The various sources of secondary remanent

magnetisation include:

Secondary TRM Reheating to elevated temperatures

can reset the remanent magnetization in grains with low

TB. For example, secondary TRM could occur in baked

margins, close to dyke intrusions.

Chemical Remanent Magnetisation (CRM) Chemical

reactions can form new ferromagnetic minerals, or

cause phase changes in existing ones. For example,

new minerals that are the product of oxidation or

exsolution will acquire a CRM describing the magnetic

field present during their growth.

Drilling Induced Remanent Magnetisation (DIRM)

The heat and motion generated by drilling can result in

the alignment of magnetic moments parallel to the

drilling direction. This causes a diagnostic bias in the

original NRM direction.

Viscous Remanent Magnetisation (VRM) VRM is

acquired in low coercivity grains by exposure to weak

fields over long time periods at constant temperatures.

VRM is often aligned with the present day geomagnetic

field or a laboratory field, i.e. the most recent field to

which the rock was exposed.

Isothermal Remanent Magnetisation (IRM) This form

of secondary magnetisation results from short-term

exposure to strong magnetic fields at a constant

temperature, such as magnetic fields generated in the

vicinity of a lightning strike in the field, or an

electromagnet in the laboratory. IRM has the capacity to

affect all magnetic grains and completely eradicate any

primary magnetic remanence.

Detrital Remanent Magnetisation (DRM) DRM refers

to the combination of depositional and post-depositional

magnetisation processes in sedimentary rocks.

Alignment of magnetic minerals occurs during sediment

deposition, but can be modified by bioturbation and

compaction before consolidation.

3.4.6 Summary of Magnetic Properties of

Common Crustal Rocks (after Reeves, 2005

Aeromagnetic Surveys, Principles, Practice &

Interpretation, Geosoft website)

(a) Sedimentary rocks can be considered as non-

magnetic or very weakly magnetic. This is the basis for

many applications of aeromagnetic surveying in that the

interpretation of survey data assumes that magnetic

sources must lie below the base of the sedimentary

sequence. This allows rapid identification of hidden

sedimentary basins in petroleum exploration. The

thickness of the sedimentary sequence may be mapped

by systematically determining the depths of the

magnetic sources (the magnetic basement) over the

survey area. Exceptions that may cause difficulties with

this assumption are certain sedimentary iron deposits,

volcanic or pyroclastic sequences concealed within a

sedimentary sequence and dykes and sills emplaced

into the sediments.

(b) Metamorphic rocks probably make up the largest

part of the earth's magnetic crust shallower than the

Curie isotherm and have a wide range of magnetic

susceptibilities. These often combine, in practice, to

give complex patterns of magnetic anomalies over

areas of exposed metamorphic terrain. Itabiritic rocks

tend to produce the largest anomalies, followed by

metabasic bodies, whereas felsic areas of

granitic/gneissic terrain often show a plethora of low

amplitude anomalies imposed on a relatively smooth

background field.

Processes of metamorphism can be shown to radically

affect magnetite and hence the magnetic susceptibility

of rocks. Serpentinisation, for example, usually creates

substantial quantities of magnetite and hence

serpentinised ultramafic rocks commonly have high

susceptibility (Figure 3/10). However, prograde

metamorphism of serpentinised ultramafic rocks causes

substitution of Mg and Al into the magnetite, eventually

shifting the composition into the paramagnetic field at

granulite grade. Retrograde metamorphism of such rock

can produce a magnetic rock again. Other factors

include whether pressure, temperature and composition

conditions favour crystallisation of magnetite or ilmenite

in the solidification of an igneous rock and hence, for

example, the production of S-type or I-type granites.

The iron content of a sediment and the ambient redox

conditions during deposition and diagenesis can be

shown to influence the capacity of a rock to develop

secondary magnetite during metamorphism.

(c) Fracture zones. Oxidation in fracture zones during

the weathering process commonly leads to the

destruction of magnetite which often allows such zones

to be picked out on magnetic anomaly maps as narrow

zones with markedly less magnetic variation than in the

surrounding rock. A further consideration is that the

original distribution of magnetite in a sedimentary rock

may be largely unchanged when that rock undergoes

metamorphism, in which case a 'paleolithology' may be

preserved - and detected by magnetic surveying. This is

a good example of how magnetic surveys can call

attention to features of geological significance that are

not immediately evident to the field geologist but which

can be verified in the field upon closer investigation.

(d) Igneous and plutonic rocks. Igneous rocks also

show a wide range of magnetic properties.

Homogeneous granitic plutons can be weakly magnetic -

often conspicuously featureless in comparison with

the magnetic signature of their surrounding rocks - but

this is by no means universal. In relatively recent years,

two distinct categories of granitoids have been

recognised: the magnetite-series (ferrimagnetic) and the

ilmenite-series (paramagnetic). This classification has

important petrogenetic and metallogenic implications

and gives a new role to magnetic mapping of granites,

both in airborne surveys and in the use of susceptibility

meters on granite outcrops. Mafic plutons and lopoliths

may be highly magnetic, but examples are also

recorded where they are virtually nonmagnetic. They

generally have low Q values as a result of coarse grain

size. Remanent magnetisation can equally be very high

where pyrrhotite is present.

(e) Hypabyssal rocks. Dykes and sills of a mafic

composition often have a strong, remanent

magnetisation due to rapid cooling. On aeromagnetic

maps they often produce the clearest anomalies which

cut discordantly across all older rocks in the terrain.

Dykes and dyke swarms may often be traced for

hundreds of kilometres on aeromagnetic maps - which

are arguably the most effective means of mapping their

spatial geometry. Some dyke materials have been

shown to be intrinsically non-magnetic, but strong

magnetic anomalies can still arise from the contact

aureole of the encasing baked country rock. An

enigmatic feature of dyke anomalies is the consistent

shape of their anomaly along strike lengths of hundreds

of kilometres, often showing a consistent direction of

remanent magnetisation. Carbonatitic complexes often

produce pronounced magnetic anomalies.

(f) Banded iron formations/itabirites. Banded iron

formations can be so highly magnetic that they can be

unequivocally identified on aeromagnetic maps.

Anomalies recorded in central Brazil, for example,

exceed 50000 nT, in an area where the earth's total field

is only 23000 nT. Less magnetic examples may be

confused with mafic or ultramafic complexes.

(g) Ore bodies. Certain ore bodies can be significantly

magnetic, even though the magnetic carriers are

entirely amongst the gangue minerals. In such a case

the association with magnetic minerals may be used as

a path-finder for the ore through magnetic survey. In

general, however, the direct detection of magnetic ores

is only to be expected in the most detailed

aeromagnetic surveys since magnetic ore bodies form

such a very small part of the rocks to be expected in a

survey area.

In an important and pioneering study of the magnetic

properties of rocks, almost 30 000 specimens were

collected from northern Norway, Sweden and Finland

and measured for density, magnetic susceptibility and

NRM (Henkel, 1991). Figure 3/8 shows the frequency

distribution of magnetic susceptibility against density for

the Precambrian rocks of this area. It is seen here that

whereas density varies continuously between 2.55 and 3.10 $\mathrm{t/m^3}$, the distribution of magnetic susceptibilities is distinctly bimodal. The cluster with the lower susceptibility is essentially paramagnetic ('non-magnetic'), peaking at $k = 2 \times 10^{-4}$ SI, whereas the higher cluster peaks at about $k = 10^{-2}$ SI and is ferrimagnetic. The bimodal distribution appears to be somewhat independent of major rock lithology and so gives rise to a typically banded and complex pattern of magnetic anomalies over the Fennoscandian shield. This may be an important factor in the success of magnetic surveys in tracing structure in metamorphic areas generally, but does not encourage the identification of specific anomalies with lithological units.

Figure 3/8: Frequency distribution of 30 000 Precambrian rock samples from northern Scandinavia tested for density and magnetic susceptibility (after Henkel 1991).

It should be noted from Figure 3/8 that, as basicity (and

therefore density) increases within both clusters, there

is a slight tendency for magnetic susceptibility also to

increase. However, many felsic rocks are just as

magnetic as the average for mafic rocks - and some

very mafic rocks in the lower susceptibility cluster are

effectively non-magnetic.

Figure 3/9 shows the relationship between magnetic

susceptibility and Koenigsberger ratio, Q determined in

the same study. The simplifying assumption, often

forced upon the aeromagnetic interpreter, that

magnetisation is entirely induced (and therefore in the

direction of the present-day field) gains some support

from this study, where the average Q for the

Scandinavian rocks is only 0.2.

The possible effects of metamorphism on the magnetic

properties of some rocks are illustrated in Figure 3/10.

The form of the figure is the same as Figure 3/8 and

attempts to divide igneous and metamorphic rocks

according to their density and susceptibility. Two

processes are illustrated. First, the serpentinisation of

olivine turns an essentially non-magnetic but ultramafic

rock into a very strongly magnetic one; serpentinites are

among the most magnetic rock types commonly

encountered. Second, the destruction of magnetite

through oxidation to maghemite can convert a rather

highly magnetic rock into a much less magnetic one.

Figure 3/9: Frequency distribution of 30 000

Precambrian rock samples from northern

Scandinavia: Koenigsberger ratio versus magnetic

susceptibility (after Henkel 1991).

Figure 3/10: Some effects of metamorphism on rock

magnetism. In blue, the serpentinisation of olivine

turns an ultramafic but non-magnetic rock

into a highly magnetic one. In red, the oxidation of

magnetite to maghemite reduces magnetic

susceptibility by two orders of magnitude.

3.5 Methods of measuring magnetic

properties

3.5.1 In the laboratory and at outcrops.

A 'susceptibility meter' may be used on hand specimens

or drill-cores to measure magnetic susceptibility (Clark

and Emerson, 1991). This apparatus may also be used

in the field to make measurements on specimens in situ

at outcrops. Owing to the wide variations in magnetic

properties over short distances, even within one rock

unit, such measurements tend to be of limited

quantitative value unless extremely large numbers of

measurements are made in a systematic way.

3.5.2 In a drillhole.

Susceptibility logging and magnetometer profiling are

both possible within a drillhole and may provide useful

information on the magnetic parameters of the rocks

penetrated by the hole.

3.5.3 From aeromagnetic survey interpretation.

The results of aeromagnetic surveys may be 'inverted'

in several ways to provide quantitative estimates of

magnetic susceptibilities, both in terms of 'pseudo-

susceptibility maps' of exposed metamorphic and

igneous terrain, and in terms of the susceptibility or

magnetisation of a specific magnetic body below the

ground surface (see Section 27.4).

3.5.4 Paleomagnetic and rock magnetic

measurements.

The role of paleomagnetic observations has been

important in the unravelling of earth history. Methods

depend on the collection of oriented specimens - often

short cores drilled on outcrops with a portable drill.

Studies concentrate on the direction as well as the

magnitude of the NRM. Progressive removal of the

NRM by either AC demagnetisation or by heating to

progressively higher temperatures (thermal

demagnetisation) can serve to investigate the

metamorphic history of the rock and the direction of the

geomagnetic field at various stages of this history.

Components encountered may vary from a soft, viscous

component (VRM) oriented in the direction of the

present day field, to a 'hard' component acquired at the

time of cooling which can only be destroyed as the

Curie point is passed. Under favourable circumstances

other paleo-pole directions may be preserved from

intermediate metamorphic episodes. Accurate

radiometric dating of the same specimens vastly

increases the value of such observations to

understanding the geologic history of the site. The

reader is referred to McElhinny and McFadden (2000)

for further information on these methods.

3.6 References /Further Reading

Clark, D.A. (1997). Magnetic petrophysics and magnetic petrology: aids to geological interpretation of magnetic surveys. AGSO Journal of Australian Geology & Geophysics, vol 17, No.2, pp 83-103.

Clark, D.A. and Emerson, D.W. (1991). Notes on rock magnetisation in applied geophysical studies. Exploration Geophysics, vol 22, No.4, pp 547-555.

Hanneson, J.E. (2003). On the use of magnetic and gravity to discriminate between gabbro and iron-rich ore forming systems. ASEG 16th Adelaide Extended Abstracts.

Henkel, H. (1991). Petrophysical properties (density and magnetization) of rocks from the northern part of the Baltic Shield. Tectonophysics, vol 192, pp 1-19.

Butler, R. (1992). Paleomagnetism. Blackwell Scientific Publications.

Dunlop, D. and Ozdemir, O. (1997). Rock Magnetism: Fundamentals and Frontiers. Cambridge University Press.

McElhinny, M.W. and McFadden, P.L. (2000). Paleomagnetism: Continents and Oceans. Academic Press, 386 pp.

Tauxe, L. (2002). Paleomagnetic Principles and Practice. Kluwer Academic Publishers.

GRAVITY

Section 4 Gravity Anomalies

Section 5 GPS in Gravity Surveys (Land, Marine & Air)

Section 6 Land Gravity Data: Acquisition and Processing

Section 7 Marine Gravity Data: Acquisition & Processing

Section 8 Airborne Gravity Data: Acquisition & Processing

Section 9 Gravity Gradiometer Data

Section 10 Satellite Altimeter Gravity Data: Acquisition & Processing

Section 11 Global Gravity Data & Models

Section 12 Advances in Gravity Survey Resolution

SECTION 4: GRAVITY ANOMALIES

4.1 Gravitational Potential Energy, U

Potential Energy U. The acceleration due to gravity, g, is generally described as the first vertical derivative (gradient) of the potential energy

$g = -\,dU/dr$

where g is the acceleration due to gravity, U the potential energy and r the radial distance (for more details see Section 2).

Figure 4/1: Potential energy surfaces and gravity response
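As a quick numerical check of the relation between g and U, the sketch below differentiates the potential of a point-mass Earth. It assumes U = -GM/r per unit mass and uses rounded textbook values for G, the Earth's mass and mean radius; none of these numbers or names come from the notes. With this sign convention the result is negative, meaning the acceleration points towards the Earth's centre.

```python
G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2 (rounded)
M_EARTH = 5.972e24       # mass of the Earth, kg (rounded)
R_EARTH = 6.371e6        # mean radius of the Earth, m (rounded)


def potential(r):
    """Potential energy per unit mass of a point-mass Earth, U = -GM/r."""
    return -G * M_EARTH / r


def gravity(r, dr=1.0):
    """g = -dU/dr estimated by a central finite difference with step dr (m)."""
    return -(potential(r + dr) - potential(r - dr)) / (2.0 * dr)


if __name__ == "__main__":
    g_surface = gravity(R_EARTH)
    print(g_surface)                  # about -9.82 m/s^2 (directed inwards)
    print(gravity(R_EARTH + 500e3))   # weaker at 500 km altitude
```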

Concept of Equipotential Surface: This is a

continuous surface that is everywhere perpendicular to

lines of force. No work is done against the field when

moving along such a surface. Mean sea level is an

equipotential surface with respect to gravity. For the

Earth there is an infinite set of equipotential surfaces

surrounding the Earth, with mean sea level being but

one. As you go away from the Earth the equipotential

surface becomes smoother due to divergence of the

gravitational field with height (see Figure 2/7).

The equipotential surface is a smooth surface. If a ball

was placed on this surface (assuming surface to be

physical surface) the ball would stay where it was put. If

the surface is in space around the Earth and the ball is

pushed then the ball will continue to move at a constant

velocity along this surface without stopping. This is how

satellites move around the Earth, since above ~500km

altitude there is little or no atmospheric drag to slow

down the satellite. There are an infinite number of

equipotential surfaces (4 shown in Figure 4/1) on which

a satellite can travel; each higher surface is slightly

smoother than its neighbor. The surface becomes

smoother due to the divergence of the gravity field (see

Section 2, Figure 2/7). The geoid surface is an

equipotential surface (height measured in metres) that

represents the mean sea level surface or mean

ellipsoidal shape of the Earth.

Note: the gravity field on an equipotential surface is not

constant since g is the perpendicular gradient across

the surface, which varies spatially (see Figure 4/1). A

high density structure at the Earth's surface will

generate a positive correlation with the equipotential

surface as well as its derived gravity (the gradient across

the equipotential surface).

The Geoid: This is the sea-level equipotential surface

which can be measured in marine areas by satellite

radar altimeters (see Section 11) after correcting the

surface heights for transient effects (tides, currents,

wind, temperature variations). An example of the geoid is shown in Figure 4/2 for an area centred on the Gulf of Thailand.

Figure 4/2: Geoid surface over SE Asia. Resolution over marine areas is good, being derived from satellite radar altimetry, whereas for land areas the geoid is derived from the much sparser coverage of gravity measurements. Resolution of the equipotential surface is being improved all the time by new satellite data (GOCE, see Section 11) and by new and improved coverage of gravity data.

4.2 Gravity (g) dependence

The observed pull of gravity g is a function of

g = function (ρ, r, V, lat, ht, topo, time)

Geology controls

1 & 2   ρ, r   density and distance of the subsurface mass distribution from the point of measurement

3       V      rock volume of the mass distribution

Other controls

4       lat    latitude: position on the Earth's surface

5       ht     height: height above or below sea level, which is used as the reference height

6       topo   topography surrounding the measurement site

7       time   e.g. lunar (tidal) effects

If we did not correct for items 4 - 7 then we would be

unable to use gravity data to investigate items 1 - 3

since the gravity effects of items 4 - 7 are generally

much larger than items 1 - 3.

There are various ways of correcting (reducing or

processing) gravity data for items 4 - 7 and the resulting

variations in gravity are called:

Equipotential U (see Section 4.1)

Free air Anomaly

Bouguer Anomaly

Isostatic Anomaly

Decompensative Anomaly

These anomaly types are inter-related. See below and Sections 6, 7 and 8 for more details on each anomaly type.

4.3 Free Air Anomaly (FAA)

FAA = g_obs - g_th + Free air correction (FAC)

where

g_obs = vertical component of gravity measured with a gravity meter.

g_th = theoretical or normal value of gravity at sea level at the measuring site (sometimes called the latitude correction). This correction removes the major component of gravity, leaving only local effects (see later).

FAC = correction for height above sea level = 0.3086 mGal per metre (for airborne gravity a more accurate correction is used, see Section 8.4.6).

Since gravity decreases with the square of the distance from the centre of the Earth, and g_obs is measured at various heights above sea level along a profile, there is a need to reduce the data to a common reference surface (datum).
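The size of the free air correction can be estimated from the inverse-square law: if g = GM/r^2, then the vertical gradient is dg/dr = -2g/r. The sketch below evaluates this for a spherical, non-rotating Earth. GM and the mean radius are assumed values that do not come from these notes, so the result is only close to, not identical with, the standard 0.3086 mGal per metre.

```python
# Sketch only: spherical, non-rotating Earth (an assumption for illustration).
GM = 3.986004418e14    # m^3 s^-2, geocentric gravitational constant (assumed value)
R_MEAN = 6.371e6       # m, mean Earth radius (assumed value)
MGAL_PER_MS2 = 1.0e5   # 1 m/s^2 = 100 Gal = 1e5 mGal

g_surface = GM / R_MEAN**2                                    # m s^-2 at the surface
# Magnitude of dg/dr = -2 g / r, converted to mGal per metre of height.
free_air_gradient = 2.0 * g_surface / R_MEAN * MGAL_PER_MS2

print(f"approximate free air gradient: {free_air_gradient:.4f} mGal/m")
```

The estimate comes out at roughly 0.308 mGal per metre, which shows where the free air coefficient comes from: it is simply the rate at which gravity falls off with height near the Earth's surface.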

Height Reference Datum is normally taken as mean sea level, but can be any defined height, e.g. the lowest point in the survey area.

FAA = g_obs - g_th + 0.3086 h mGal

where h is measured in metres

There are two ways of viewing data processing. The first

is to wrongly assume you are moving all

measurements to the sea level datum. The correct way

to view the correction is that it is being applied at the

observation location.

FAA = g_obs - g_th + 0.3086 h     (moving g_obs to sea level: the wrong way to consider this correction)

FAA = g_obs - (g_th - 0.3086 h)   (correcting at the g_obs location: the correct way to consider this correction)

Both equations are the same; only the emphasis differs. To move g_obs to another height requires upward or downward continuation of the field.
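The two ways of writing the free air anomaly can be checked numerically. The following is a minimal sketch in which the function names and the station values are hypothetical; only the 0.3086 mGal per metre coefficient is taken from the notes.

```python
FREE_AIR_GRADIENT = 0.3086  # mGal per metre, coefficient quoted in the notes


def free_air_anomaly(g_obs, g_th, h):
    """FAA (mGal) with the theoretical value corrected to the station height.

    g_obs and g_th are in mGal, h is the height above the datum in metres.
    """
    return g_obs - (g_th - FREE_AIR_GRADIENT * h)


def free_air_anomaly_alt(g_obs, g_th, h):
    """Same quantity written as g_obs - g_th + 0.3086 h."""
    return g_obs - g_th + FREE_AIR_GRADIENT * h


# Hypothetical station: values chosen only to exercise the formula.
g_obs_example = 979000.0   # mGal
g_th_example = 979100.0    # mGal
h_example = 500.0          # metres above sea level

faa = free_air_anomaly(g_obs_example, g_th_example, h_example)
faa_alt = free_air_anomaly_alt(g_obs_example, g_th_example, h_example)
print(f"FAA = {faa:.2f} mGal (alternative form: {faa_alt:.2f} mGal)")
```

Both functions return the same number, so the choice between them is one of interpretation, not arithmetic: the anomaly still refers to the observation point, not to sea level.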

In Figure 4/3 the free air correction appears to over-correct g_obs. This is because it does not take into account the gravity effect of the rock mass between the measurement site and the sea level datum. Thus the free air anomaly is not normally used for land-based gravity studies. Its main use is at sea (see later).

Gravity Reference Datum: since gravity meters are relative measuring instruments, their values need to be tied and adjusted to an international network of known gravity values called IGSN71 (see later).

Assumption Made to Generate FAA

- no assumptions made

- FAA strongly influenced by topography/bathymetry

- the FAA at long wavelengths varies about zero due to

isostatic processes

Implications of FAA on exploration

- not used generally for land based surveys

- more generally used in marine surveys, where the water layer/bathymetry is used as the first layer of the model (this layer can be 2D or 3D)

4.4 Bouguer Anomaly (BA)

BA = g_obs - g_th + 0.3086 h - Bouguer Correction

Thus the BA equation is simply the FAA with an

additional correction called the Bouguer Correction.

i.e. BA = FAA - Bouguer Correction (BC)


Figure 4/3: Relations between topography, observed gravity, free air anomaly, latitude correction and Bouguer anomaly. Note that g_obs shows a negative correlation with topography and the FAA gives a positive correlation with topography.

The Bouguer Correction corrects for the rock mass between the measuring site and the height datum (sea level). The correction assumes the rock to be a flat infinite slab of thickness h (metres) and constant density ρ (g/cc). This is not strictly true, since the top of the real rock mass is the topography rather than a flat surface.

BC = 2πGρh = 0.04191 ρ h mGal

where G = gravitational constant = 6.672 x 10^-11 N m^2 kg^-2
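The numerical coefficient in the Bouguer correction follows from the infinite slab formula BC = 2πGρh once the units are converted (density from g/cc to kg/m^3, acceleration from m/s^2 to mGal). The sketch below does that conversion; the function and constant names are hypothetical, and G is the value quoted in the notes.

```python
import math

G = 6.672e-11             # N m^2 kg^-2, value quoted in the notes
KG_M3_PER_G_CC = 1000.0   # 1 g/cc = 1000 kg/m^3
MGAL_PER_MS2 = 1.0e5      # 1 m/s^2 = 1e5 mGal


def bouguer_slab_mgal(rho_g_cc, h_m):
    """Attraction (mGal) of an infinite flat slab: 2 * pi * G * rho * h.

    rho_g_cc is the slab density in g/cc and h_m its thickness in metres.
    """
    rho_si = rho_g_cc * KG_M3_PER_G_CC
    return 2.0 * math.pi * G * rho_si * h_m * MGAL_PER_MS2


# Coefficient for unit density (1 g/cc) and unit thickness (1 m).
coefficient = bouguer_slab_mgal(1.0, 1.0)
print(f"Bouguer coefficient: {coefficient:.5f} mGal per (g/cc) per metre")
```

With this value of G the coefficient comes out at about 0.0419, in line with the 0.04191 used in the notes; the small difference in the last digit depends on the value of G adopted.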

In Fig 4/3 the Bouguer Correction has successfully

removed the main effects of topographic correlation.

The equation for the Bouguer anomaly where no terrain

correction is applied is called the Simple Bouguer

Anomaly (SBA), where

SBA = g_obs - g_th + 0.3086 h - 0.04191 ρ h

SBA = g_obs - (g_th - 0.3086 h + 0.04191 ρ h)

or when terrain corrections are applied it is called the Complete Bouguer Anomaly (CBA), where

CBA = Simple Bouguer Anomaly + Terrain Correction

Always define what COMPLETE means (e.g. terrain corrections applied out to 22 km).
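The chain from observed gravity to the simple and complete Bouguer anomalies can be sketched as below, with the slab density ρ written explicitly. The function names, the station values, the density of 2.67 g/cc and the terrain correction of 1.2 mGal are all hypothetical values chosen for illustration; only the coefficients 0.3086 and 0.04191 come from the notes.

```python
FREE_AIR_GRADIENT = 0.3086   # mGal per metre, from the notes
BOUGUER_COEFF = 0.04191      # mGal per (g/cc) per metre, from the notes


def simple_bouguer_anomaly(g_obs, g_th, h, rho):
    """SBA (mGal) = g_obs - g_th + 0.3086 h - 0.04191 rho h.

    g_obs and g_th in mGal, h in metres above datum, rho in g/cc.
    """
    free_air_anomaly = g_obs - g_th + FREE_AIR_GRADIENT * h
    bouguer_correction = BOUGUER_COEFF * rho * h
    return free_air_anomaly - bouguer_correction


def complete_bouguer_anomaly(g_obs, g_th, h, rho, terrain_correction):
    """CBA (mGal) = SBA + terrain correction (mGal)."""
    return simple_bouguer_anomaly(g_obs, g_th, h, rho) + terrain_correction


# Hypothetical station and assumed reduction density.
sba = simple_bouguer_anomaly(979000.0, 979100.0, 500.0, 2.67)
cba = complete_bouguer_anomaly(979000.0, 979100.0, 500.0, 2.67, 1.2)
print(f"SBA = {sba:.3f} mGal, CBA = {cba:.3f} mGal")
```

In this example the free air anomaly of about 54 mGal is almost entirely cancelled by the slab correction, which illustrates why the Bouguer anomaly shows much less correlation with topography than the free air anomaly.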